🔥Let's Do DevOps: Intro to AWS CodeCommit, CodePipeline, and CodeBuild with Terraform

This blog series focuses on presenting complex DevOps projects as simple and approachable via plain language and lots of pictures. You can do it!

AWS is one more entrant in the increasingly crowded CI/CD market, and it offers an entire suite of products:

CodeCommit: A managed git repo. We'll check our terraform code into a repo hosted in CodeCommit. Enough said.

CodeBuild: A managed continuous integration service. It runs job definitions, dynamically spins build servers up and down, and can support your own tooling, e.g. terraform! We'll write our Terraform plan and apply builds in CodeBuild.

CodeDeploy: A managed deployment service that helps push code from a repo to AWS services where it can be executed. This is the only

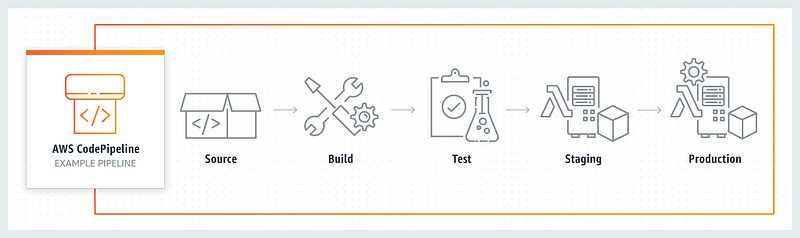

CodeXservice from AWS we won’t use.CodePipeline: A managed deployment service that supports complex deployment processes including code testing, automated deployment all the way to production. We’ll write a pipeline to automate a PR merge → terraform deploy.

As you'll soon see from this walkthrough, or from reading over the module source code (you can find it here), this project requires WAY more code than any other project: 1,646 lines of terraform and YAML config.

```console
AwsCodePipelineDemo % find . 2>/dev/null | xargs wc -l 2>/dev/null | grep total
    1646 total
```

Create an IAM user for Bootstrapping

We'll need an IAM user with administrative access for our initial cloud bootstrapping. However, unlike other CI/CDs I've played with, AWS CodeBuild can run under an IAM role once it's fully operational, which removes the need for hard-coded administrative credentials (if those don't scare you a little, they should!). Let's do this.

Create an IAM user and check the Programmatic access box.

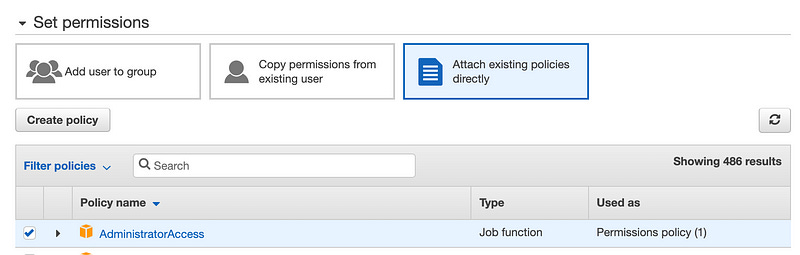

Attach an existing policy directly to the user. We'll give this user unfettered AdministratorAccess. That's not a great idea for prod; it's worth thinking about exactly what you want this user to do. In our case, this IAM user will only be used to deploy our bootstrap. Once CodeBuild is fully deployed, it'll assume an IAM role directly rather than needing a hard-coded user (read: way better security).

Tags aren't important for us now, so you can leave them blank.

Now we have an IAM user. Make sure to copy down both the Access key ID and the Secret access key; the secret won't be shown again.

In your local terminal, export those values as environment variables. We'll only be using them for the bootstrap, to deploy our CodeBuild setup the first time. After that, CodeBuild will be able to update these resources with terraform.
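A minimal sketch of that export step, assuming a bash or zsh shell. AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY are the standard variables read by both the AWS CLI and the Terraform AWS provider; the values below are placeholders for the keys you just copied, and us-east-1 matches the region in this project's provider block.

```shell
# Placeholder values: paste in the access key ID and secret from the IAM console
export AWS_ACCESS_KEY_ID="AKIAXXXXXXXXXXXXXXXX"
export AWS_SECRET_ACCESS_KEY="replace-with-your-secret-access-key"
# Region used throughout this project's Terraform config
export AWS_DEFAULT_REGION="us-east-1"
```

These exports only last for the current shell session, which suits a one-time bootstrap.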

Before we run terraform init or terraform apply to build some resources, let's walk through each module. I'll talk about each step in sequence, but there are enough interdependencies that we'll need to create them all at once.

The Bootstrap Module

We're going to run our initial config from our local computer, but that's not our end goal: we want our cloud CI/CD to pull its fair share of the weight here. The first module, called bootstrap, creates everything terraform needs to run in an ephemeral CI/CD environment (an S3 bucket for remote state and a DynamoDB table for state locking). It will also build some IAM and other resources required by our AWS CodeBuild and CodePipeline services.

Find the bootstrap module in main.tf and look for the strings in quotes. Those are names we can customize. Notably, the S3 bucket name can't use capital letters or most special characters, and it has to be globally unique (read: update it!). The other names only need to be unique within your own account, but feel free to customize them too.

It’ll look like this:

Don't run terraform init or apply yet; we'll customize all the modules and run one big build later.
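Since a bad bucket name is the most likely thing to trip up the first apply, here's a rough pre-flight check you could run on a candidate name. `check_bucket_name` is a hypothetical helper, not part of the project, and it covers only the core S3 naming rules: 3 to 63 characters; only lowercase letters, digits, dots, and hyphens; starting and ending with a letter or digit. It can't tell you whether a name is globally unique; only the AWS API can.

```shell
# Hypothetical helper: checks a proposed S3 bucket name against the core
# naming rules. Does NOT check global uniqueness or every AWS edge case.
check_bucket_name() {
  local name="$1"
  # Must be 3 to 63 characters long
  if [ "${#name}" -lt 3 ] || [ "${#name}" -gt 63 ]; then
    echo "invalid: must be 3-63 characters"
    return 1
  fi
  # Lowercase letters, digits, dots, hyphens; starts and ends with a letter or digit
  if ! printf '%s\n' "$name" | grep -Eq '^[a-z0-9][a-z0-9.-]*[a-z0-9]$'; then
    echo "invalid: use lowercase letters, digits, dots, hyphens; start and end with a letter or digit"
    return 1
  fi
  echo "ok"
}
```

For example, `check_bucket_name kyler-codebuild-demo-terraform-tfstate` prints `ok`, while `check_bucket_name My_Bucket` is rejected for its capital letters and underscore.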

The CodeCommit Module

CodeCommit is by far the simplest service, and naturally, the simplest terraform module. This module builds a hosted git repo using the CodeCommit service. This is where we’ll check in our code.

If you'd like, change repository_name to any string you want.

Look at this simple little module:

```hcl
# Build AWS CodeCommit git repo
resource "aws_codecommit_repository" "repo" {
  repository_name = var.repository_name
  description     = "CodeCommit Terraform repo for demo"
}
```

The CodeBuild module

CodeBuild is the service that spins up builders and tells them what to run. Those instructions live in buildspec files, which are written in YAML. Here's the buildspec we'll be using for the Terraform Plan CodeBuild job.

The $TERRAFORM_VERSION variable you see is an environment variable we'll pass to the build from our CodeBuild environment configuration.

```yaml
version: 0.2

phases:
  install:
    runtime-versions:
      python: 3.7
    # TERRAFORM_VERSION is set in the CodeBuild project's environment block
    commands:
      - tf_version=$TERRAFORM_VERSION
      # Download and install the pinned Terraform release
      - wget https://releases.hashicorp.com/terraform/"$TERRAFORM_VERSION"/terraform_"$TERRAFORM_VERSION"_linux_amd64.zip
      - unzip terraform_"$TERRAFORM_VERSION"_linux_amd64.zip
      - mv terraform /usr/local/bin/
  build:
    commands:
      - terraform --version
      - terraform init -input=false
      - terraform validate
      - terraform plan -lock=false -input=false
```
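To see exactly what the install phase downloads, here's the same URL construction run locally with the version pinned in the CodeBuild project (0.12.16). It only builds and prints the strings and downloads nothing; the variable names `zip_name` and `download_url` are illustrative, not from the project.

```shell
# Same value the CodeBuild project passes in as an environment variable
TERRAFORM_VERSION="0.12.16"

# Mirrors the wget/unzip lines in the buildspec's install phase
zip_name="terraform_${TERRAFORM_VERSION}_linux_amd64.zip"
download_url="https://releases.hashicorp.com/terraform/${TERRAFORM_VERSION}/${zip_name}"

echo "$download_url"
```

Because the version only lives in that one environment variable, upgrading Terraform in the pipeline is a one-line change, as long as the new release is published for linux_amd64.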

Feel free to customize the names of the Terraform Plan and Terraform Apply jobs. I kept mine simple.

There is a lot going on in the CodeBuild module. Here's the source for one of the jobs. Note the service_role, which is the IAM role this CodeBuild job runs under. Figuring out these permissions took a long time and lots of iteration. Neither HashiCorp (the company behind Terraform) nor AWS makes this particularly easy to figure out.

You can also see the environment settings (the type of host to spin up for the job), where logs are sent, and the artifact and source settings. The artifacts and source are both of type CODEPIPELINE: rather than pulling code itself, the job receives it from the CodePipeline that calls it.

```hcl
resource "aws_codebuild_project" "codebuild_project_terraform_plan" {
  name          = var.codebuild_project_terraform_plan_name
  description   = "Terraform codebuild project"
  build_timeout = "5" # minutes
  # IAM role this build runs as
  service_role = var.codebuild_iam_role_arn

  # Artifacts are handed over by CodePipeline
  artifacts {
    type = "CODEPIPELINE"
  }

  cache {
    type     = "S3"
    location = var.s3_logging_bucket
  }

  environment {
    compute_type                = "BUILD_GENERAL1_SMALL"
    image                       = "aws/codebuild/standard:2.0"
    type                        = "LINUX_CONTAINER"
    image_pull_credentials_type = "CODEBUILD"

    # Consumed by the buildspec's install phase
    environment_variable {
      name  = "TERRAFORM_VERSION"
      value = "0.12.16"
    }
  }

  logs_config {
    cloudwatch_logs {
      group_name  = "log-group"
      stream_name = "log-stream"
    }

    s3_logs {
      status   = "ENABLED"
      location = "${var.s3_logging_bucket_id}/${var.codebuild_project_terraform_plan_name}/build-log"
    }
  }

  # Source also comes from CodePipeline; the buildspec path is relative to the repo root
  source {
    type      = "CODEPIPELINE"
    buildspec = "buildspec_terraform_plan.yml"
  }

  tags = {
    Terraform = "true"
  }
}
```

The CodePipeline Module

CodePipeline is the tool that stitches everything together. It lets you build workflows that grab sources from multiple places (including non-AWS locations like Bitbucket or GitHub), send that code to builds, pause for manual approvals, and do all sorts of other cool stuff I'm still discovering.

This module builds:

An S3 bucket for the temporary artifact storage (read: code) pulled out of the git repo

An IAM role that permits CodePipeline to assume it

An IAM policy that permits CodePipeline to run — it has a lot of permissions

The actual CodePipeline with every step: downloading the source code from the CodeCommit repo (and watching the repo to trigger on changes), running a Terraform Plan CodeBuild stage against that code, waiting for manual approval of the changes, and, once approved, continuing on to a Terraform Apply CodeBuild stage

There's clearly a ton here. Here's the config for the CodePipeline itself. There aren't many configuration steps, but the AWS documentation for these stages isn't great, and I had to iterate a dozen or so times to find compatible categories, providers, and configurations for each step. I ended up basically reading the API docs and guessing how Terraform would abstract them. If you're building this yourself, hopefully this code snippet can save you some pain.

```hcl
resource "aws_codepipeline" "tf_codepipeline" {
  name     = var.tf_codepipeline_name
  role_arn = aws_iam_role.tf_codepipeline_role.arn

  # Where CodePipeline stores the artifacts passed between stages
  artifact_store {
    location = aws_s3_bucket.tf_codepipeline_artifact_bucket.bucket
    type     = "S3"
  }

  # Pull the code from the CodeCommit repo's master branch
  stage {
    name = "Source"

    action {
      name             = "Source"
      category         = "Source"
      owner            = "AWS"
      provider         = "CodeCommit"
      version          = "1"
      output_artifacts = ["SourceArtifact"]

      configuration = {
        RepositoryName = var.terraform_codecommit_repo_name
        BranchName     = "master"
      }
    }
  }

  # Run the Terraform Plan CodeBuild project against the source
  stage {
    name = "Terraform_Plan"

    action {
      name            = "Terraform-Plan"
      category        = "Build"
      owner           = "AWS"
      provider        = "CodeBuild"
      input_artifacts = ["SourceArtifact"]
      version         = "1"

      configuration = {
        ProjectName = var.codebuild_terraform_plan_name
      }
    }
  }

  # Pause until a human approves the plan
  stage {
    name = "Manual_Approval"

    action {
      name     = "Manual-Approval"
      category = "Approval"
      owner    = "AWS"
      provider = "Manual"
      version  = "1"
    }
  }

  # Run the Terraform Apply CodeBuild project against the same source
  stage {
    name = "Terraform_Apply"

    action {
      name            = "Terraform-Apply"
      category        = "Build"
      owner           = "AWS"
      provider        = "CodeBuild"
      input_artifacts = ["SourceArtifact"]
      version         = "1"

      configuration = {
        ProjectName = var.codebuild_terraform_apply_name
      }
    }
  }
}
```

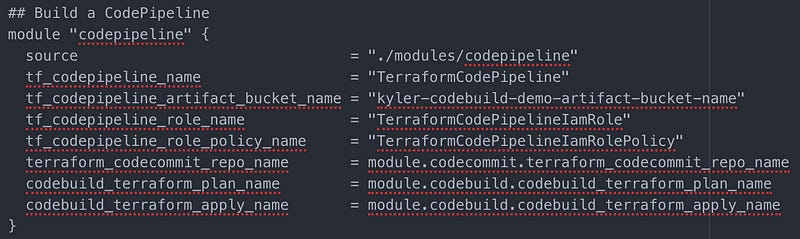

Here's the configuration snippet from main.tf. Feel free to customize any of the quoted strings. You do have to customize the name of the S3 bucket used to store artifacts: it has to be globally unique (and use only lowercase letters, numbers, hyphens, and periods).

It’s Showtime!

Run terraform init to initialize the working directory. Note that for now, the terraform S3 backend is commented out: since the backend doesn't exist yet, we want to run all our changes locally. The AWS provider also has an assume_role block (used to assume an all-powerful administrative role when running from AWS) that we can't use yet. That role doesn't exist yet either, so it's commented out too.

```hcl
terraform {
  required_version = ">=0.12.16"

  # Commented out for the bootstrap run: the bucket and lock table don't exist yet
  /*
  backend "s3" {
    bucket         = "kyler-codebuild-demo-terraform-tfstate"
    key            = "terraform.tfstate"
    region         = "us-east-1"
    dynamodb_table = "codebuild-dynamodb-terraform-locking"
    encrypt        = true
  }
  */
}

# Use any 2.36.x release of the AWS provider (2.36.0 or higher, below 2.37.0)
provider "aws" {
  region  = "us-east-1"
  version = "~> 2.36.0"

  # Commented out for the bootstrap run: the role doesn't exist yet
  /*
  assume_role {
    # Remember to update this account ID to yours
    role_arn     = "arn:aws:iam::718626770228:role/TerraformAssumedIamRole"
    session_name = "terraform"
  }
  */
}
```

Let’s run terraform apply to verify our terraform config is all valid and your creds have been accepted. If you see a plan to build lots of items, you’re probably good to go. Answer yes and hit enter and terraform will start building.

Terraform will build all the items it can. If it can't build something, it'll give you error messages. Since this config works, the errors are probably about invalid names, particularly the S3 bucket names. If you left the names as I provided them, my buckets already exist, so the AWS API will report an error; just update the bucket names. It'll also complain if a name doesn't fit the required format. The errors are very clear, so read carefully and you'll be able to fix the problem.

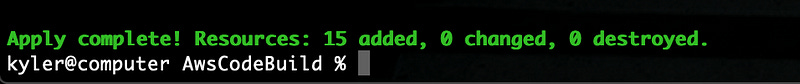

Hopefully, Terraform will report a happy state, as seen below, with all of the resources successfully built.

Now that our S3 bucket and Terraform locking DynamoDB table are ready, let's pivot our state to them. In the terraform block (not the provider block), uncomment the backend "s3" block by deleting the /* before it and the */ after it. When you're done it'll look like the snapshot below.

Make sure the bucket name and DynamoDB table name you used are set here — they have to match the resources you just built for this to work properly!

Run terraform init once more, and terraform will notice the new backend and ask if you want to copy your local state to it. Heck yes, we do. A remote state lets a team work on the same infrastructure much more reliably than emailing a state file around, and concurrently too: the DynamoDB lock table prevents team members from running terraform apply at the same time and tripping one another up. Type yes and hit enter.

Hopefully, the initialization goes well, and you’ll see a green success message.

If you see the above success message, you have successfully pivoted your Terraform state and locking to the cloud. Awesome! Now let's get CodePipeline going.

CodeCommit — It’s Simple… Right?

I didn’t expect a hosted git repo to consume any significant part of my time writing this. Git is open-source, and one of the more mature technologies of the modern web, so a hosted server by one of the biggest cloud players should be simple and intuitive.

However, that's not the case. Pushing code from your local computer to a CodeCommit repo over SSH requires an SSH key, and SSH keys can't be added to an account's root user, which is probably the user you're logged into your AWS account as right now.

Instead, you need to create an IAM user and attach the public part of your SSH key to that user. Or cheat, as I did in this case, and put my public SSH key on the administrative IAM user we already created for bootstrapping. In an enterprise environment this is a bad idea, since those creds have full administrative access to the account. But in our lab, heck yeah.

First, open up the IAM panel, go to Users, and find the bootstrap user we built by hand.

Open up the user and find the Security credentials tab.

Look for the SSH keys for AWS CodeCommit section and click the Upload SSH public key button.

AWS will show you a text field for you to enter the text of your public key.

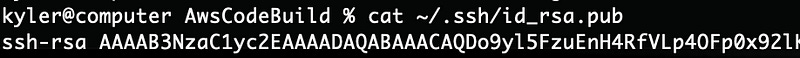

It’s likely you already have an RSA public key. You can test by running this command: cat ~/.ssh/id_rsa.pub. If you get a response, that’s the public key you should use.

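If not, you need to generate an RSA key with ssh-keygen -t rsa -b 4096 (on macOS or Linux). Spell out -t rsa: recent OpenSSH releases create Ed25519 keys by default, which wouldn't land at ~/.ssh/id_rsa. Then run the cat command again to get your public key.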
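Here's a small sketch of that check-then-generate logic, assuming the default key path ~/.ssh/id_rsa. `show_or_create_rsa_key` is a hypothetical helper name. It passes `-t rsa` explicitly because IAM expects an RSA public key (at least 2048 bits) for CodeCommit SSH access.

```shell
# Hypothetical helper: print the RSA public key at the given path, generating
# a new 4096-bit RSA key pair first if no .pub file exists. Defaults to ~/.ssh/id_rsa.
show_or_create_rsa_key() {
  local key_path="${1:-$HOME/.ssh/id_rsa}"
  if [ ! -f "${key_path}.pub" ]; then
    mkdir -p "$(dirname "$key_path")"
    # ssh-keygen prompts for a passphrase (and before overwriting an existing private key)
    ssh-keygen -t rsa -b 4096 -f "$key_path"
  fi
  cat "${key_path}.pub"
}
```

Run `show_or_create_rsa_key` with no arguments and copy the single line it prints.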

Copy the whole string into the SSH public key box and hit Upload SSH public key.

If all went well, AWS will accept the public key and show the key ID here with a status of Active.

And if this were any other git server, we'd be done. However, Amazon requires that we also send a username with our SSH key. The username isn't the name… of our user, as would make any semblance of sense; it's the SSH key ID displayed above. You can either specify it every time you run a git command (ugh), or you can set it once in your ~/.ssh/config file.

If you like vi, run vi ~/.ssh/config to create the file (if it's not there) and add the following host entry. You can also use any other text editor to create or update the file.

```
Host git-codecommit.*.amazonaws.com
  User (Your SSH public key ID)
```

Then save the file, and your git should now work. FINALLY!
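If you'd rather script that config change than open an editor, here's a sketch that appends the same host entry. `add_codecommit_ssh_host` is a hypothetical helper; the `IdentityFile` line is an addition pointing SSH at the RSA key generated earlier, and `APKAEXAMPLEKEYID` below is a placeholder for the SSH key ID IAM showed you.

```shell
# Hypothetical helper: append a CodeCommit host entry to an SSH config file.
# $1 = SSH key ID from the IAM console, $2 = config path (defaults to ~/.ssh/config)
add_codecommit_ssh_host() {
  local key_id="$1"
  local config="${2:-$HOME/.ssh/config}"
  mkdir -p "$(dirname "$config")"
  # The wildcard matches the CodeCommit git endpoint in every region
  cat >> "$config" <<EOF

Host git-codecommit.*.amazonaws.com
  User ${key_id}
  IdentityFile ~/.ssh/id_rsa
EOF
  # OpenSSH rejects config files that other users can write to
  chmod 600 "$config"
}
```

Usage: `add_codecommit_ssh_host APKAEXAMPLEKEYID`. Run it only once, since each call appends another copy of the entry.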

Push our code to CodeCommit

In order to see our CodePipeline in action, we need to push our code up. With the SSH key in place, let’s do that.

Head over to the CodeCommit web portal and find your repo. Over on the right side, click the SSH Clone URL button to copy it to your clipboard. Then open a terminal in the directory where your main.tf file is stored.

Initialize your git repo, then stage all your files and commit them. Run git remote add origin followed by the SSH URL you copied above to tell git where to push. Then run git push origin master to push everything up to our CodeCommit repo (the pipeline's Source stage watches the master branch, so that's the branch to push). If all goes well, it'll look like the below:
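Here's the same sequence as a small shell function, sketched under a few assumptions: the function name and commit message are made up, and it renames the current branch to master before pushing, because newer git installs may be configured to default to main while the pipeline's Source stage watches master.

```shell
# Hypothetical helper: commit everything in the current directory and push it
# to a CodeCommit repo. $1 = the repo's SSH clone URL.
push_to_codecommit() {
  local remote_url="$1"
  git init &&
    git add . &&
    git commit -m "Initial commit of Terraform CodePipeline demo" &&
    # The pipeline's Source stage watches "master", whatever git's default branch is
    git branch -M master &&
    git remote add origin "$remote_url" &&
    git push -u origin master
}
```

For example: `push_to_codecommit ssh://git-codecommit.us-east-1.amazonaws.com/v1/repos/<your-repo-name>`, using the URL you copied from the console.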

Okay, Showtime Really This Time

Remember, our CodePipeline is watching our repo and will detect this change within a minute or two. Head over to the CodePipeline console and you can see that our pipeline is in progress.

Click into the pipeline and you can watch the steps execute. If you see an error or want to see the CLI output from our YML buildspec, click on Details.

Scroll all the way down to the bottom to see our status. If it looks like the below and you see Terraform's "Infrastructure is up-to-date" message, then everything has gone well and you should buy a lottery ticket! If you see errors, read them closely. The first time I pushed this to take screenshots, I forgot to uncomment the AWS provider's assume_role block, and Terraform reported the error pretty clearly.

Click back into the pipeline, and if all went well, we're on the Manual_Approval step. The idea is that terraform ran a plan and needs confirmation from a human before it moves on to building resources. Hit the “Review” button to take an action.

The Review section allows us to leave a comment. Hit “Approve” if you’d like to see the Terraform_Apply step run. Otherwise, hit Reject to end this pipeline run.

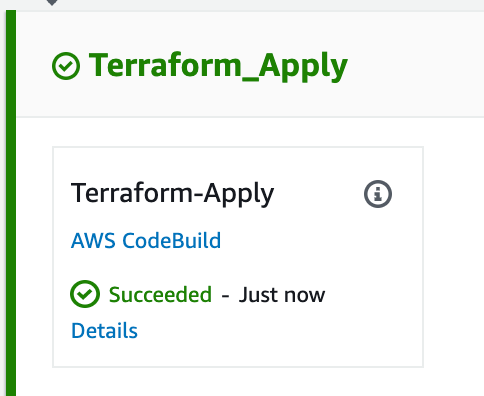

I hit Approve, and can see that the Terraform_Apply step succeeded. Hit details to read any CLI output from the run.

Now that all is live, feel free to push terraform code changes to this repo. Add a VPC module, build some hosts, anything you’d like. Terraform is now fully capable of building any resource you’d like.

To destroy your lab and avoid any charges, comment out the backend "s3" block in the terraform block and the assume_role block in the AWS provider, then run terraform init (answer yes to copying the state back to local), then terraform destroy.

Summary

The AWS development and CI/CD toolkit seems highly customizable, and each component can (and must) be locked down via IAM policies. That creates both a highly secure environment and a lot of headaches for those responsible for building and maintaining it over time (boo!).

The amount of configuration and time required to build these features, tie them together in a meaningfully secure way, and even to push code to a repo greatly eclipses every other project I’ve worked with. The only one that comes close is Azure DevOps. There can be a great strength in the complexity available to you — you can build bespoke pipelines that do EXACTLY what you’re looking for.

But only some of the complexity here is that useful kind. A great deal of it is simply a byproduct of platform immaturity. Here's hoping some time and magic AWS dust will develop this platform into a juggernaut in the space, as they have with so many other cloud technologies.

The source code for everything is hosted here:

KyMidd/AwsCodePipelineDemo on GitHub

If you’re interested in reading about other CI/CDs I’ve built up, please visit my author page.

Thanks all. Good luck out there!

kyler