🔥Let’s Do DevOps: Build and Test Docker with AWS Batch Jobs

This blog series focuses on presenting complex DevOps projects as simple and approachable via plain language and lots of pictures. You can do it!

Hey all!

I recently saw an opportunity for automation while working with a developer. The developer described their very manual process of building and testing docker containers. The process basically goes like this:

- Update any necessary files, scripts, Dockerfile, etc.

- Build the Dockerfile

- Tag the docker image

- Authenticate to the AWS Elastic Container Registry (ECR)

- Push the docker image to the ECR

- Go to the AWS console and run several AWS Batch (compute on demand) jobs, monitor their progress for 10–30 minutes, and make sure they succeed

As you can see, that’s a lot of manual steps! The team I worked with only had one person who was connected to this process enough to run it, so if that single person was at lunch, no one could update the Docker image, or test everything.

The request was to automate this entire process and make it simple enough that the rest of the team could complete this process without advanced knowledge of Docker, ECR, or Batch.

I ❤ to automate things, let’s do this.

Process: PR Build Validation

As anyone who has tried to automate something can tell you, it’s 50% tooling and 50% process. It’s important for us to understand exactly how this process works so we can map it to automated steps.

In this case, we should absolutely use pull requests. This process is important for two reasons — one, it permits peer review. Two, it permits us to create an artificial blocker for new code, and requires it to be thoroughly tested before it’s used in any environment, and certainly in production.

Given that, our process will look like this:

All code will be committed to a git repo, and changes will be managed with pull requests

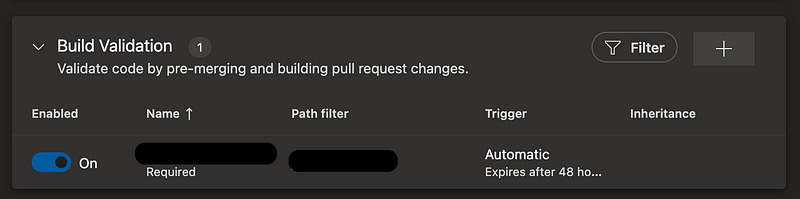

When a pull request is opened, we’ll use the Azure DevOps branch “build validation” to trigger a pipeline.

This build validation will use an internal host to provide compute and will:

- Download the PR branch version of the code

- Build the Docker image

- Map the local Docker image to the ECR with the :latest tag

- Utilize an IAM permission to authenticate to ECR, and route that authentication to Docker

- Push the Docker image to the ECR

- Kick off some Batch compute jobs and monitor their status. If any fail, fail the pipeline

If everything succeeds, the PR will pass our build validation and qualify to be merged. Peer reviews will still be required, but once those are provided, the code can be merged to main

Other higher envs read only from the main/master branch, and can then be kicked off individually by hand (and gated by environment owner approval) to deploy the new image to that environment

All of this will be automated within our CI/CD, and not require any manual steps, which is a HUGE step forward from where we were before.

Let’s walk through how each step was automated.

Create a Repo and Set Build Validation Policy

I love this feature of Azure DevOps. Most CI platforms have similar functionality, where you can set a pipeline that must succeed before the PR is permitted to merge.

We built this team a repo in Azure DevOps and updated the main/master branch policy to have an automated Build Validation job when new code is proposed against this branch, and require that it completes successfully to qualify code to merge.

This is first process-wise, but you won’t be able to set this until we’ve created and imported our YAML pipeline. Let’s cover that now.

Docker Build, Tag, Push

Before we do any cool Batch jobs and monitoring, we need to build a pipeline YAML file to tell our CI/CD what to do with the code. That includes building the Docker image from the Dockerfile, tagging it for the remote ECR repository, authenticating to the ECR, then pushing the image.

There are of course bespoke tasks within Azure DevOps provided by the community or maintained by Docker which can do these steps, but I’m a huge proponent of Do It Yourself (tm) by using bash or any other CLI. If you want ultimate flexibility and the ability to truly understand what’s happening, DIY.

You can put these into a single step, but I like to separate long-running or distinct jobs from one another so it’s easier for automation consumers (the folks running this pipeline in the future) to see exactly what’s happening.

You can also see some repeated variables — any string that MUST align, might as well be a variable, right? That way the pipeline always works right. E.g., $(docker_tag1).

    - task: Bash@3
      displayName: Build Docker Images
      inputs:
        targetType: inline
        workingDirectory: $(docker_dest_config_directory)
        failOnStderr: true
        script: |
          docker build -f Dockerfile -t $(docker_tag1) .

    - task: Bash@3
      displayName: Copy Docker Image to ECR
      inputs:
        targetType: inline
        workingDirectory: $(docker_dest_config_directory)
        failOnStderr: true
        script: |
          docker tag $(docker_tag1):latest $(ecr_account_id).dkr.ecr.us-east-1.amazonaws.com/$(docker_tag1):latest
          /usr/local/bin/aws ecr get-login-password | docker login --username AWS --password-stdin https://$(ecr_account_id).dkr.ecr.us-east-1.amazonaws.com 2>&1
          docker push $(ecr_account_id).dkr.ecr.us-east-1.amazonaws.com/$(docker_tag1):latest

Check out this line also, which is pretty awesome. It uses the AWS CLI's get-login-password to fetch a temporary password for an ECR. Your host will need to be authenticated to AWS already or have an assigned IAM role with permissions to auth to ECR. The output of that command is piped into docker login for the registry in the us-east-1 region. The trailing 2>&1 doesn't suppress stderr. It redirects docker login's stderr to stdout, so nothing docker login prints lands on stderr and trips failOnStderr: true.
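If the `2>&1` part is new to you, here's a minimal sketch of what it does. `noisy_login` is a made-up stand-in for `docker login` (an assumption for illustration, so nothing here touches Docker or AWS): it succeeds, but it also writes a warning to stderr, the way some CLIs do.

```shell
# Hypothetical stand-in for `docker login`: it succeeds, but also writes
# a warning to stderr.
noisy_login() {
  echo "WARNING: credentials stored unencrypted" >&2
  echo "Login Succeeded"
}

# Capture only what the command writes to stderr (stdout is discarded).
# This is the stream a step with failOnStderr: true is watching.
stderr_only=$(noisy_login 2>&1 >/dev/null)

# With a plain 2>&1, stderr is merged into stdout. Nothing is hidden;
# the warning just arrives on stdout instead.
merged=$(noisy_login 2>&1)

echo "stderr alone: ${stderr_only}"
echo "merged output: ${merged}"
```

The warning still shows up in the pipeline log either way. The redirect only changes which stream carries it.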

    /usr/local/bin/aws ecr get-login-password | docker login --username AWS --password-stdin https://$(ecr_account_id).dkr.ecr.us-east-1.amazonaws.com 2>&1

Which is pretty awesome, right? We’re also going to include these final two steps in our pipeline. The first, Trigger Batch Testing Jobs, runs a bash script I wrote to kick off and monitor AWS Batch. We’ll cover that in the next section.

The last task cleans up any files we downloaded from our CI to run this job. This is a great security practice since you might download passwords or other sensitive files as part of our automation. Note the line that says condition: always() which means this step runs in every circumstance — if you cancel the job, it’ll still run. If the job fails at the above step, it’ll still run. Make sure you’re careful with the command there rm -rf (path) since it will recursively and forcefully delete any files in a folder.

    - task: Bash@3
      displayName: Trigger Batch Testing Jobs
      inputs:
        targetType: inline
        workingDirectory: $(System.DefaultWorkingDirectory)
        failOnStderr: true
        script: |
          chmod +x ./pipelines/batch_testing/$(batch_test_script)
          ./pipelines/batch_testing/$(batch_test_script)

    - task: Bash@3
      displayName: Cleanup files after run
      condition: always() # this step will always run, even if the pipeline is canceled
      inputs:
        targetType: inline
        workingDirectory: $(System.DefaultWorkingDirectory)
        failOnStderr: false
        script: |
          rm -rf $(docker_dest_config_directory)
          rm -rf $(System.DefaultWorkingDirectory)
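Since `rm -rf` against an empty or wrong variable can go badly, here's a small sketch of a guard you could wrap around it. `safe_cleanup` is a hypothetical helper, not something from the original pipeline, and the rules it enforces (no empty path, no `/`) are just my assumptions about what "careful" should mean here.

```shell
# safe_cleanup is a hypothetical helper, not part of the original pipeline.
# It refuses to delete when the path is empty or "/", and it is a no-op
# when the directory does not exist.
safe_cleanup() {
  target="${1:-}"
  if [ -z "$target" ] || [ "$target" = "/" ]; then
    echo "Refusing to delete unsafe path: '${target}'" >&2
    return 1
  fi
  if [ ! -d "$target" ]; then
    echo "Nothing to clean up at: ${target}"
    return 0
  fi
  # "--" stops option parsing, so a path starting with "-" is not read as a flag.
  rm -rf -- "$target"
  echo "Removed: ${target}"
}
```

If the function were defined at the top of the inline script, the cleanup lines could become `safe_cleanup "$(docker_dest_config_directory)"`, so a variable that expands to nothing produces an error instead of a surprise.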

Run Batch Jobs, Monitor Status, Give Pass/Fail

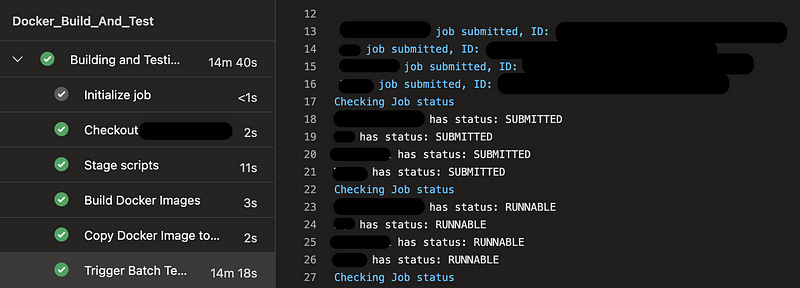

At this stage, we’ve built a docker container and pushed the image to a repository. In the manual process at this stage, the engineer would log into the AWS console, kick off a few batch jobs, and monitor their progress.

Therefore, our goal here is to do the same — kick off Batch jobs and monitor them — however, instead of a manual AWS console, we’ll use the AWS CLI for both steps, with a simple “while” loop to continue checking the status of jobs every n seconds — in this case, every 5 seconds.

First, some nice-looking banners to tell folks a job is running and why. We also set the date variable with a nicely formatted date string we’ll use to name the batch jobs shortly.

    echo "**************************"
    echo "##[section]Spinning up batch jobs to test the proposed changes"
    echo "**************************"

    # Set date, format YYYYMMDD, used to name batch jobs
    date=$(date '+%Y%m%d')
    echo ""

Next, we need to submit a job. The AWS CLI has our back with aws batch submit-job and we fill in all the required variables to name it, and provide stuff like the job definitions, sizing overrides, and CLI flags to pass to our freshly built container. We pipe the output of that command into jq to pull out the ID of the job that’s returned by AWS to our terminal and store it as a variable like “job1” or “job2”.

Now that we have it, we echo it back to the screen for clarity’s sake. I also created a “name” field here so this team could more easily see which jobs succeed or fail later on in this script.

    # Submit Job 1
    job1_name="job1"
    job1=$(aws batch submit-job --job-name "BatchJob1Name_int_${date}" --job-definition BatchJob1JobDef:2 --job-queue arn:aws:batch:us-east-1:1234567890:job-queue/batch-job-queue-1 --region us-east-1 --container-overrides vcpus=4,memory=8192,command='["-a=foo","-b=bar"]' | jq -r '.jobId')
    echo "##[command]${job1_name} job submitted, ID: ${job1}"

    # Submit Job 2
    job2_name="job2"
    job2=$(aws batch submit-job --job-name "BatchJob2Name_int_${date}" --job-definition BatchJob2JobDef:2 --job-queue arn:aws:batch:us-east-1:1234567890:job-queue/batch-job-queue-1 --region us-east-1 --container-overrides vcpus=4,memory=8192,command='["-a=foo","-b=bar"]' | jq -r '.jobId')
    echo "##[command]${job2_name} job submitted, ID: ${job2}"

I show 2 of these, but you could do n jobs. The most I’m doing is 4, but this could scale out indefinitely.
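If you do want to scale to n jobs, copy-pasting a block per job gets old fast. Here's a rough sketch of one way to loop instead. Everything named here (`JOB_SPECS`, `SUBMITTED_JOBS`, `submit_all_jobs`) is hypothetical, the queue ARN and job definitions are the same placeholders as above, and I've left out `--container-overrides` to keep it short. It also swaps jq for the AWS CLI's global `--query` and `--output text` options, which is a different technique from the script above.

```shell
# Sketch only: the queue ARN, region, and job definitions are placeholders.
JOB_QUEUE="arn:aws:batch:us-east-1:1234567890:job-queue/batch-job-queue-1"
REGION="us-east-1"

# One "display_name|job_definition" entry per job, separated by spaces.
JOB_SPECS="job1|BatchJob1JobDef:2 job2|BatchJob2JobDef:2"

# Filled in by submit_all_jobs as space-separated "display_name=job_id" pairs.
SUBMITTED_JOBS=""

submit_all_jobs() {
  date_stamp=$(date '+%Y%m%d')
  for spec in $JOB_SPECS; do
    name="${spec%%|*}"        # text before the "|"
    definition="${spec#*|}"   # text after the "|"
    # --query/--output are global AWS CLI options, used here instead of jq.
    job_id=$(aws batch submit-job \
      --job-name "${name}_int_${date_stamp}" \
      --job-definition "$definition" \
      --job-queue "$JOB_QUEUE" \
      --region "$REGION" \
      --query 'jobId' --output text) || return 1
    echo "##[command]${name} job submitted, ID: ${job_id}"
    SUBMITTED_JOBS="${SUBMITTED_JOBS}${SUBMITTED_JOBS:+ }${name}=${job_id}"
  done
}
```

Calling `submit_all_jobs` once would then leave every name and ID in `SUBMITTED_JOBS`, and adding a job becomes a one-entry change to `JOB_SPECS`.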

I set a couple of human-readable variables in our script to track whether we’re still checking each job. We’ll flip these later, once a job either succeeds or fails and no longer needs to be checked.

    # Initialize tracking variables
    job1_still_checking="yes"
    job2_still_checking="yes"

Now for our mega while loop. We start a loop, and on each pass we’ll check each job’s status, again passing the job status returned from AWS to jq so it can filter for our status result. If SUCCEEDED, we stop checking this job on each pass. If FAILED, we break out of the loop — any job’s failing means there’s no need to test further. We handle this response lower down in the next code section.

At the bottom of this section, we check whether any jobs still need checking. This is needed since a single job succeeding shouldn’t close out our while loop unless all other jobs have also succeeded. Once every job has finished, we exit the loop. Otherwise, we sleep for 5 seconds and go around again.

    while true; do
      echo "##[command]Checking Job status"

      if [ "$job1_still_checking" = "yes" ]; then
        job1_results=$(aws batch describe-jobs --jobs "$job1" --region us-east-1 | jq -r '.jobs[].status')
        echo "${job1_name} has status: ${job1_results}"
        case "$job1_results" in
          "SUCCEEDED")
            echo "##[section]${job1_name} job succeeded"
            job1_still_checking="no"
            ;;
          "FAILED")
            echo "##[error]${job1_name} job failed"
            job1_still_checking="no"
            break
            ;;
        esac
      fi

      if [ "$job2_still_checking" = "yes" ]; then
        job2_results=$(aws batch describe-jobs --jobs "$job2" --region us-east-1 | jq -r '.jobs[].status')
        echo "${job2_name} has status: ${job2_results}"
        case "$job2_results" in
          "SUCCEEDED")
            echo "##[section]${job2_name} job succeeded"
            job2_still_checking="no"
            ;;
          "FAILED")
            echo "##[error]${job2_name} job failed"
            job2_still_checking="no"
            break
            ;;
        esac
      fi

      # Once no jobs are still being checked, exit; otherwise wait and poll again
      if [ "$job1_still_checking" = "no" ] && [ "$job2_still_checking" = "no" ]; then
        echo "##[section]Done checking"
        break
      fi

      sleep 5
    done
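The loop above repeats one block per job, which is fine for two jobs. As a hedged sketch of how the same idea could handle any number of job IDs, here's a version built around a hypothetical `wait_for_jobs` function. The `MAX_POLLS` timeout is my own addition (the original script polls until every job finishes), and, as an assumption about taste rather than a requirement, it uses the AWS CLI's global `--query` option in place of jq.

```shell
# Sketch only: REGION, POLL_SECONDS, and MAX_POLLS are assumed settings.
REGION="${REGION:-us-east-1}"
POLL_SECONDS="${POLL_SECONDS:-5}"
MAX_POLLS="${MAX_POLLS:-720}"   # 720 polls x 5 seconds is about one hour

# Usage: wait_for_jobs JOB_ID [JOB_ID ...]
# Returns 0 if every job reaches SUCCEEDED, 1 on the first FAILED job,
# and 2 if the poll budget runs out first.
wait_for_jobs() {
  pending="$*"
  polls=0
  while [ -n "$pending" ]; do
    if [ "$polls" -ge "$MAX_POLLS" ]; then
      echo "##[error]Timed out waiting for: ${pending}"
      return 2
    fi
    polls=$((polls + 1))
    echo "##[command]Checking Job status"
    still_pending=""
    for job_id in $pending; do
      status=$(aws batch describe-jobs --jobs "$job_id" --region "$REGION" \
        --query 'jobs[0].status' --output text)
      echo "${job_id} has status: ${status}"
      case "$status" in
        SUCCEEDED) echo "##[section]${job_id} job succeeded" ;;
        FAILED)    echo "##[error]${job_id} job failed"; return 1 ;;
        *)         still_pending="${still_pending}${still_pending:+ }${job_id}" ;;
      esac
    done
    pending="$still_pending"
    # Only sleep when there is something left to poll.
    if [ -n "$pending" ]; then sleep "$POLL_SECONDS"; fi
  done
  echo "##[section]Done checking"
  return 0
}
```

A call like `wait_for_jobs "$job1" "$job2" || exit 1` would fail the pipeline step on either a failed job or a timeout, which covers the case where a job gets stuck in RUNNABLE and never finishes.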

Outside of our loop, our final check is to make sure every job’s result is SUCCEEDED. This confirms our while loop exited in a happy state rather than breaking out on a failure. If not, we echo out that something went wrong and exit with a non-zero code so the pipeline fails, and folks are free to send another commit to the PR.

    # If all happy, return 0 exit code. Else return 1 and fail
    if [ "$job1_results" = "SUCCEEDED" ] && [ "$job2_results" = "SUCCEEDED" ]; then
      echo "##[section]All tests pass"
      exit 0
    else
      echo "##[error]At least one test has failed. Either your code or our tests aren't valid."
      echo "##[error]Feel free to run the validations again"
      exit 1
    fi

Profit

Once this project was delivered, this single engineer, who kept their phone on during vacation and lunches to do this urgent, critical task, no longer had to. And that’s the real success — freedom to work on interesting things, instead of repeating boring, time-consuming stuff.

You can find the entire code-base here:

KyMidd/AzureDevOps_DockerAndBatch_Automation on GitHub (github.com)

Good luck out there!

kyler