🔥Let’s Do DevOps: Making a GitHub Action Event Driven + New Repo Immediate Configuration + GitHub Apps + Python3 Lambda (Part 1)


This blog series focuses on presenting complex DevOps projects as simple and approachable via plain language and lots of pictures. You can do it!

Hey all!

That title is a mouthful! Over the past few months I’ve taken on a side project: building a “little script” that configures all the repos in our GitHub Org. It sets all the permissions, builds all the branch policies, checks all the boxes we care about, etc.

That Little Script has been a constantly evolving project, since I’ve used it as a vehicle to learn more about GitHub Actions. I’ve built a circuit breaker for when we run out of GitHub API tokens, sharded the workload over n builders, and written an overview of what the tool does and how it works.

The basic idea of this tool is that we have lots of repos, and we want to keep them configured properly. To do that, we download a list of all the repos, then iterate over them one at a time, split across 2 builders running in parallel. However, there has been a big problem: when a new repo is created, it isn’t configured until the next time the “GitHubCop” (the tongue-in-cheek name for the tool I built) runs, which is currently nightly. That could be a long time during which the repo is configured incorrectly, doesn’t connect to our Jenkins instance, doesn’t have the right permissions, etc.

What would be even better is if the GitHubCop script configured a new repo immediately when it’s created. So that’s what I built. As part of it, I built:

- A GitHub App: lets us collect Org-wide events, like “New Repo Created”, and send a webhook to an arbitrary endpoint
- AWS API Gateway: gives us a permanent URL to receive the webhook
- AWS Lambda: processes and verifies the inbound webhook, extracts the info we need, grabs the secrets we need, and triggers the GitHubCop Action in our Org
- AWS Secrets Manager: stores the GitHub PAT (Personal Access Token) used to authenticate to GitHub and trigger the Action with a REST call, plus the webhook secret
- An updated GitHubCop Action: accepts an optional input, a repo name, so that one repo can be processed immediately rather than processing all repos

This is an absurdly big write-up, so I broke it into two parts. In this one, we’ll talk about the GitHub App, the API Gateway, and the Lambda’s IAM and Terraform configuration. The Python code and the changes needed for the Action to target a single repo come in Part 2. This is going to be HUGE regardless. Let’s get started. And if you just care about the code, scroll to the end: all the code is shared so you can build it yourself.

My Own GitHub App

In GitHub Enterprise, repos are organized into Organizations, which are basically collections of repos. There are strikingly few configurations that waterfall down from the “Org level” to the “Repo level”. Almost all settings are configured individually, within each repo. That’s one of the reasons I had to build the GitHubCop in the first place!

I initially thought I could set an on: trigger for a GitHub Action in a repo within the Organization, and specify on: new-repo-creation or something. However, that’s not available. In fact, even detecting that a repo was created in your Org isn’t terribly easy. There is an audit log of all actions within the Org, and I could potentially poll that log with some process, but that sounds like a lot of work, not to mention that almost every poll of the log won’t find any new repos: we create maybe a dozen repos a day, not hundreds. And I don’t want to wait until the next poll runs to configure the repo. I want it to happen immediately!

There is a GitHub-supported way to do this — a GitHub App. This sounded scary when I first read about it — do I need to pay GitHub in order to create an App for my Org? The answer is no — it’s totally free, just not immediately obvious. And GitHub Apps can trigger on different events in your Org, including new repos (but also LOTS of other stuff!). It’s perfect, let’s do it.

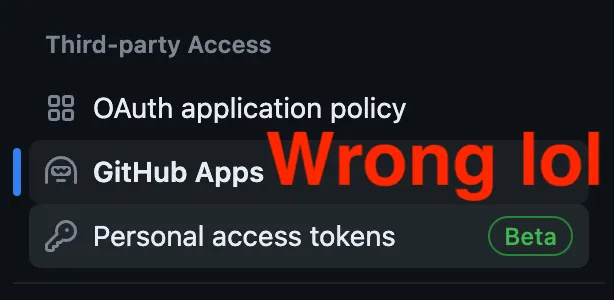

You need to be an Org admin in order to proceed, so if you aren’t, go grab one! You would intuitively think you should start from Org → Settings → Third-party Access → GitHub Apps, since that’s where you see which GitHub Apps are installed in your Org right now. Weirdly, you’d be wrong.

There’s no way to create a GitHub App from the GitHub App page, lol

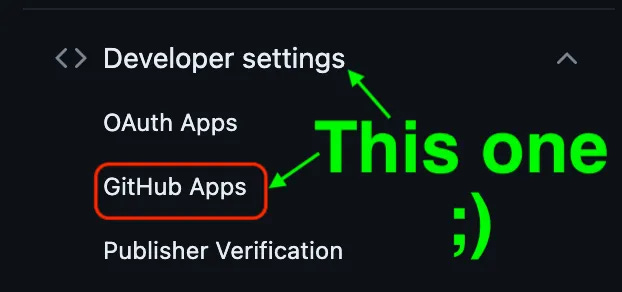

You need to start from Org → Settings → Developer settings → GitHub Apps → New

I’m going to skip over most of this, because it’s not totally relevant what you choose for the App’s picture or name. We need it to exist, but almost none of the configuration is done here. GitHub has a great write-up for how to create a new app, you can follow that:

Creating a GitHub App - GitHub Docs


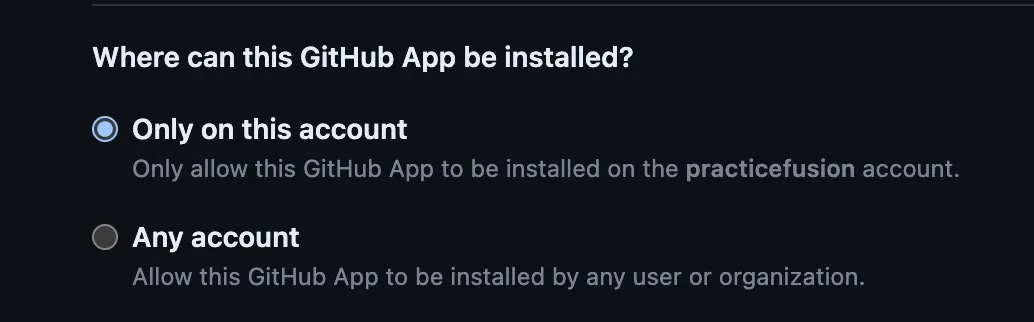

If you’re creating the App only for your corporate Org, select “Only on this account” at the bottom.

The permissions are also not terribly salient yet: we’re using this App to harvest events in the Org, not to DO anything after that. (In the future I’m going to do that, so look for a future write-up on using the App’s own token rather than a user’s PAT. You get way more API call tokens that way, but that’s beyond scope today.)

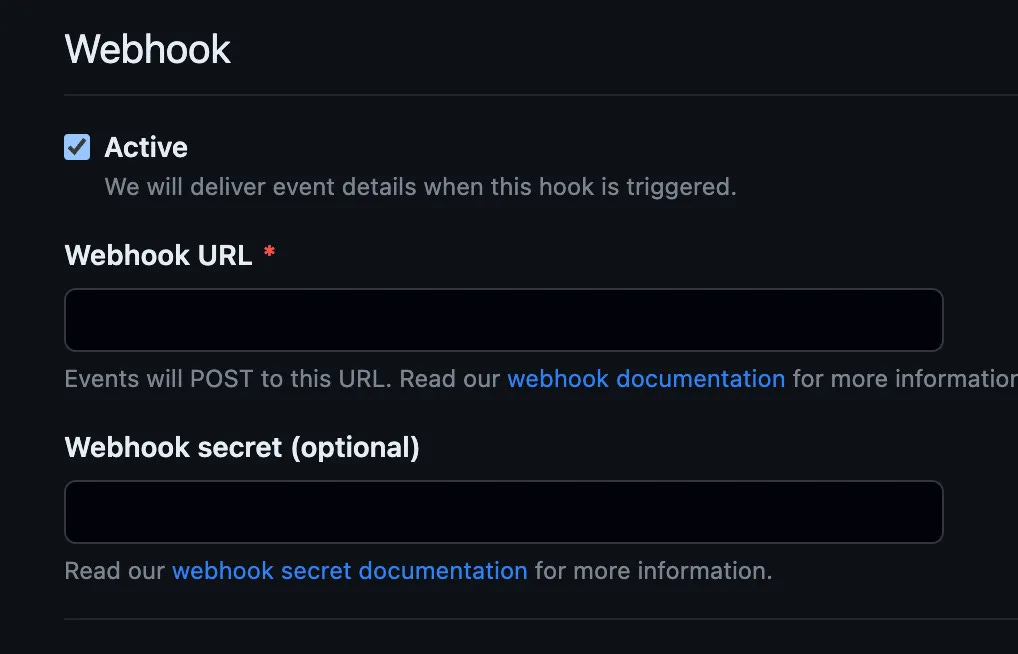

This part of the App is relevant for us: we want to generate webhooks (HTTP POST notifications) when stuff happens. If you already have the URL of the API Gateway, put it here; otherwise, uncheck the Active box for now. You can come back later and update any of these settings. This is also where you set the webhook secret, which GitHub uses to sign each payload so our Lambda can verify it.

Hit save! Yay, now we have a GitHub App installed in our Org! Hit Configure over on the right to go into the full settings menu for this App.

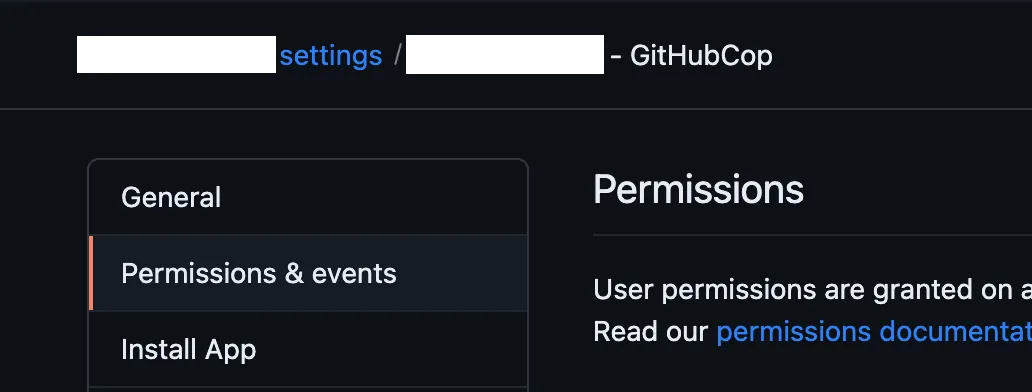

Over on the left, click on “Permissions & events”. These events are the ones that will cause a webhook (full of tasty, tasty information about what just happened) to be sent to our API Gateway and Lambda.

Scroll down until you see Repository. Check that box. That’ll make sure that any time a Repository is created (or deleted, archived, etc.), a webhook will find its way to our lambda. Woot!
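To make that Repository subscription concrete, here’s a minimal Python sketch of how a handler might decide whether a delivery is a “new repo” event. GitHub names the event type in the X-GitHub-Event header and puts the specific action (created, deleted, archived, and so on) in the payload’s action field. The function name extract_new_repo and the trimmed sample payload are hypothetical, for illustration only; the real Lambda code comes in Part 2.

```python
import json


def extract_new_repo(headers: dict, body: str):
    """Return (owner, repo_name) if this delivery announces a new repo, else None.

    Hypothetical helper for illustration, not the GitHubCop Lambda's actual code.
    """
    # Match header names case-insensitively, since casing can vary in transit
    lowered = {k.lower(): v for k, v in headers.items()}
    if lowered.get("x-github-event") != "repository":
        return None  # some other event type (ping, installation, ...)

    payload = json.loads(body)
    if payload.get("action") != "created":
        return None  # deleted, archived, renamed, etc.

    repo = payload["repository"]
    return repo["owner"]["login"], repo["name"]


# Trimmed, made-up payload containing only the fields used above
sample_body = json.dumps({
    "action": "created",
    "repository": {"name": "shiny-new-repo", "owner": {"login": "my-org"}},
})
print(extract_new_repo({"X-GitHub-Event": "repository"}, sample_body))
# ('my-org', 'shiny-new-repo')
```

Filtering on both the header and the action keeps deletes, archives, and the ping GitHub sends when a webhook is first set up from kicking off a GitHubCop run.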

Okay, that’s our GitHub App. Let’s go build our Lambda.

API Gateway and Lambda

You could just as easily build an Azure Logic App, but I’d never built an AWS Lambda before, so it seemed like a fun project to see if I could figure out how.

Lambdas are code that runs when the Lambda is triggered. By default they time out after 3 seconds, so you can see that they aren’t intended to be long-running processes. However, they are built to run many invocations at the same time. Wanna call a Lambda hundreds of times a second, all day and all night? That’s what Lambdas are for.

Lambda IAM

As with most things in AWS, the first thing we should think about is security: what is this Lambda permitted to do? That means starting with IAM, the security service for all things AWS. First of all, we need an IAM role, which is a bucket for permissions. We can even restrict what’s able to use the role with an assume role policy, so that’s what the aws_iam_policy_document data source does: it only permits the Lambda service (lambda.amazonaws.com) to assume this role.

Then the aws_iam_role resource creates the actual IAM role and attaches the assume role policy we just built, passing it in as JSON via assume_role_policy.

```hcl
data "aws_iam_policy_document" "GitHubCopNewRepoTriggerRole_assume_role" {
  statement {
    effect = "Allow"
    principals {
      type        = "Service"
      identifiers = ["lambda.amazonaws.com"]
    }
    actions = ["sts:AssumeRole"]
  }
}

resource "aws_iam_role" "GitHubCopNewRepoTriggerRole" {
  name               = "GitHubCopNewRepoTriggerRole"
  assume_role_policy = data.aws_iam_policy_document.GitHubCopNewRepoTriggerRole_assume_role.json
}
```
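If you’re curious what that data source hands to the role, here’s a rough Python rendering of the same trust policy, plus a toy check of who may assume the role. This is an approximation: Terraform’s exact JSON output may differ in small details, and principal_may_assume is a hypothetical helper that ignores most of IAM’s real evaluation rules (conditions, Deny statements, wildcards).

```python
import json

# Approximation of the trust policy rendered by aws_iam_policy_document above
trust_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "lambda.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}


def principal_may_assume(policy: dict, service: str) -> bool:
    """Toy check (hypothetical): does any Allow statement let `service` assume the role?"""
    for stmt in policy["Statement"]:
        actions = stmt["Action"]
        if isinstance(actions, str):
            actions = [actions]
        services = stmt.get("Principal", {}).get("Service", [])
        if isinstance(services, str):
            services = [services]
        if stmt["Effect"] == "Allow" and "sts:AssumeRole" in actions and service in services:
            return True
    return False


print(json.dumps(trust_policy, indent=2))
print(principal_may_assume(trust_policy, "lambda.amazonaws.com"))  # True
print(principal_may_assume(trust_policy, "ec2.amazonaws.com"))     # False
```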

Next we want our Lambda to be allowed to read some secrets. That’s where we’ll store the GitHub PAT (a security token we can use to tell GitHub to do stuff, like start the GitHubCop Action) and the GitHub webhook secret, the shared key GitHub uses to compute an HMAC-SHA256 signature of each webhook body, which adds a layer of security. We’ll talk more about this when we get to the Python Lambda.

The first statement of this role policy (an inline policy attached to the role, rather than a standalone IAM policy) gives the Lambda the ability to describe and read secrets, scoped to the two specific secret ARNs.

Then we grant our Lambda kms:Decrypt on the particular KMS key that one of our secrets is encrypted with. This is only required if a secret is encrypted with a CMK (Customer Managed Key). If the secret uses an AWS managed key and is accessed from the same account, decryption is permitted by default.

```hcl
# Look up the PAT secret so the KMS key it's encrypted with can be referenced below
# (skip this if the data source is already defined elsewhere in your config)
data "aws_secretsmanager_secret" "github_pat_secret_kms_cmk_arn" {
  arn = var.github_pat_secret_arn
}

resource "aws_iam_role_policy" "GitHubCopRepoTrigger_ReadSecrets" {
  name = "ReadSecret"
  role = aws_iam_role.GitHubCopNewRepoTriggerRole.id
  policy = jsonencode(
    {
      "Version" : "2012-10-17",
      "Statement" : [
        {
          "Effect" : "Allow",
          "Action" : [
            "secretsmanager:GetResourcePolicy",
            "secretsmanager:GetSecretValue",
            "secretsmanager:DescribeSecret",
            "secretsmanager:ListSecretVersionIds"
          ],
          "Resource" : [
            var.github_pat_secret_arn,
            var.github_webhook_secret_arn
          ]
        },
        {
          "Effect" : "Allow",
          "Action" : [
            "kms:Decrypt"
          ],
          "Resource" : [
            data.aws_secretsmanager_secret.github_pat_secret_kms_cmk_arn.kms_key_id
          ]
        }
      ]
    }
  )
}
```
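For a sense of what these permissions unlock, here’s a minimal sketch of reading one of those secrets from Python with boto3, which ships with the Lambda Python runtimes. The read_secret function and the FakeSecretsClient stand-in are hypothetical; the fake client only exists so the function can be exercised without AWS credentials, and the ARN and value are made up.

```python
def read_secret(secret_arn: str, client=None) -> str:
    """Fetch a secret's string value from AWS Secrets Manager.

    Needs secretsmanager:GetSecretValue on the secret, plus kms:Decrypt when
    the secret is encrypted with a customer managed key. Hypothetical helper.
    """
    if client is None:
        import boto3  # bundled with the AWS Lambda Python runtimes
        client = boto3.client("secretsmanager")
    response = client.get_secret_value(SecretId=secret_arn)
    return response["SecretString"]


class FakeSecretsClient:
    """Offline stand-in exposing the one boto3 method read_secret calls."""

    def __init__(self, secrets: dict):
        self._secrets = secrets

    def get_secret_value(self, SecretId: str) -> dict:
        return {"ARN": SecretId, "SecretString": self._secrets[SecretId]}


# Made-up ARN and value, for illustration only
pat_arn = "arn:aws:secretsmanager:us-east-1:111111111111:secret:github-pat"
fake = FakeSecretsClient({pat_arn: "not-a-real-token"})
print(read_secret(pat_arn, client=fake))  # not-a-real-token
```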

Next we specify some data sources: aws_caller_identity, which can look up info about our authenticated AWS session (including the account ID), and aws_region, which can find… well, our AWS region.

Then we build another role policy. This one grants our Lambda the ability to write logs to CloudWatch, which is critical since a Lambda spins up and down within a matter of seconds: we’re not going to SSH to something to read the logs, because it will already have been destroyed. We give the Lambda the right to create log groups (logs:CreateLogGroup) and then to populate its own log group with logs (logs:CreateLogStream and logs:PutLogEvents).

```hcl
# Build data source to find AWS account id
data "aws_caller_identity" "current" {}

# Get region
data "aws_region" "current" {}

resource "aws_iam_role_policy" "GitHubCopRepoTrigger_Cloudwatch" {
  name = "Cloudwatch"
  role = aws_iam_role.GitHubCopNewRepoTriggerRole.id
  policy = jsonencode(
    {
      "Version" : "2012-10-17",
      "Statement" : [
        {
          "Effect" : "Allow",
          "Action" : "logs:CreateLogGroup",
          "Resource" : "arn:aws:logs:${data.aws_region.current.name}:${data.aws_caller_identity.current.account_id}:*"
        },
        {
          "Effect" : "Allow",
          "Action" : [
            "logs:CreateLogStream",
            "logs:PutLogEvents"
          ],
          "Resource" : [
            "arn:aws:logs:${data.aws_region.current.name}:${data.aws_caller_identity.current.account_id}:log-group:/aws/lambda/GitHubCopRepoTrigger:*"
          ]
        }
      ]
    }
  )
}
```
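To see what those interpolations expand to, here’s a small Python sketch that builds the same two ARN patterns. Lambda writes to a log group named /aws/lambda/ followed by the function name by default, which is why the second statement only covers that one group. The function name log_arns and the example account ID are hypothetical.

```python
def log_arns(region: str, account_id: str, function_name: str) -> dict:
    """Build the two CloudWatch Logs ARN patterns granted by the Cloudwatch role policy.

    Hypothetical helper for illustration.
    """
    return {
        # logs:CreateLogGroup: any log group in this account and region
        "create_log_group": f"arn:aws:logs:{region}:{account_id}:*",
        # logs:CreateLogStream / PutLogEvents: only this function's default log group
        "write_logs": (
            f"arn:aws:logs:{region}:{account_id}"
            f":log-group:/aws/lambda/{function_name}:*"
        ),
    }


# Made-up account ID for illustration
for name, arn in log_arns("us-east-1", "111111111111", "GitHubCopRepoTrigger").items():
    print(name, arn)
```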

API Gateway

Lambdas can be exposed directly to the internet via a configuration called a Lambda Function URL. That’s all I thought I needed, until I made an update to the Python Lambda and the URL changed. That’s weird, because the AWS docs for Function URLs say that’s not supposed to happen. So I decided to do it another way: an API Gateway.

API Gateways are capable of all sorts of awesome behavior: they can do SSL offloading and layer 7 routing of URLs, very load-balancer-esque, but we don’t care about any of that. We only want a transparent proxy with a permanent URL, so we can send our webhooks to it.

To be totally honest, this is the part I understand the least. Hopefully my explanations are accurate, but if not please call them out in comments and I’ll correct!

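First we build a REST API (aws_api_gateway_rest_api); this is the part that has a public URL and can receive traffic. Then we add a resource (aws_api_gateway_resource) under the API’s root path with a path_part of {proxy+}, a greedy path variable that matches any sub-path. That’s what puts it in proxy mode.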

```hcl
resource "aws_api_gateway_rest_api" "github_cop_api_gateway" {
  name        = "GitHubCopApiGateway"
  description = "GitHubCop API Gateway"
}

resource "aws_api_gateway_resource" "github_cop_api_gateway_proxy" {
  rest_api_id = aws_api_gateway_rest_api.github_cop_api_gateway.id
  parent_id   = aws_api_gateway_rest_api.github_cop_api_gateway.root_resource_id
  path_part   = "{proxy+}"
}
```

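These two method resources tell the API Gateway which HTTP methods to accept and what type of authorization to enforce: one covers the {proxy+} resource and one covers the API’s root path. Since we want a transparent proxy, we accept ANY method and don’t check for any authorization at the gateway; verifying the webhook is the Lambda’s job.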

```hcl
resource "aws_api_gateway_method" "github_cop_api_gateway_method" {
  rest_api_id   = aws_api_gateway_rest_api.github_cop_api_gateway.id
  resource_id   = aws_api_gateway_resource.github_cop_api_gateway_proxy.id
  http_method   = "ANY"
  authorization = "NONE"
}

resource "aws_api_gateway_method" "github_cop_api_gateway_proxy_root" {
  rest_api_id   = aws_api_gateway_rest_api.github_cop_api_gateway.id
  resource_id   = aws_api_gateway_rest_api.github_cop_api_gateway.root_resource_id
  http_method   = "ANY"
  authorization = "NONE"
}
```
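Since the gateway accepts unauthenticated requests, the webhook secret is what keeps random callers from triggering GitHubCop. GitHub sends an X-Hub-Signature-256 header containing sha256= followed by the hex HMAC-SHA256 of the raw body, keyed with the webhook secret. Here’s a minimal sketch of that check using only the standard library; signature_is_valid is a hypothetical name, and the secret and body in the usage example are made up.

```python
import hashlib
import hmac


def signature_is_valid(webhook_secret: str, body: bytes, signature_header: str) -> bool:
    """Check GitHub's X-Hub-Signature-256 header against the raw request body.

    Hypothetical helper; the header value is "sha256=" + hex HMAC-SHA256
    of the body, keyed with the webhook secret.
    """
    if not signature_header or not signature_header.startswith("sha256="):
        return False
    expected = "sha256=" + hmac.new(
        webhook_secret.encode("utf-8"), body, hashlib.sha256
    ).hexdigest()
    # Constant-time comparison, done on bytes so odd header characters can't raise
    return hmac.compare_digest(expected.encode("utf-8"), signature_header.encode("utf-8"))


# Made-up secret and body for illustration
secret = "not-a-real-secret"
body = b'{"action": "created"}'
good_header = "sha256=" + hmac.new(secret.encode("utf-8"), body, hashlib.sha256).hexdigest()
print(signature_is_valid(secret, body, good_header))        # True
print(signature_is_valid(secret, body, "sha256=deadbeef"))  # False
```

hmac.compare_digest is used instead of == so the comparison time doesn’t reveal how much of a forged signature matched.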

Then we configure our Lambda integrations, again one for the proxy resource and one for the root. We tell the API Gateway to POST anything it receives over to Lambda; POST is the only integration HTTP method Lambda integrations support anyway, so that’s perfect. The AWS_PROXY type tells the API Gateway to hand the whole request, HTTP headers and body included, to the Lambda as its event. That’s necessary, since we’ll compute the payload hash on our side and check it against the signature header GitHub sends as part of the webhook.

```hcl
resource "aws_api_gateway_integration" "github_cop_api_gateway_lambda_integration" {
  rest_api_id             = aws_api_gateway_rest_api.github_cop_api_gateway.id
  resource_id             = aws_api_gateway_method.github_cop_api_gateway_method.resource_id
  http_method             = aws_api_gateway_method.github_cop_api_gateway_method.http_method
  integration_http_method = "POST"
  type                    = "AWS_PROXY"
  uri                     = aws_lambda_function.GitHubCop_New_Repo_Trigger.invoke_arn
}

resource "aws_api_gateway_integration" "github_cop_api_gateway_lambda_root" {
  rest_api_id             = aws_api_gateway_rest_api.github_cop_api_gateway.id
  resource_id             = aws_api_gateway_method.github_cop_api_gateway_proxy_root.resource_id
  http_method             = aws_api_gateway_method.github_cop_api_gateway_proxy_root.http_method
  integration_http_method = "POST"
  type                    = "AWS_PROXY"
  uri                     = aws_lambda_function.GitHubCop_New_Repo_Trigger.invoke_arn
}
```
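With an AWS_PROXY integration, the Lambda receives the whole HTTP request as its event (headers, body, path, and an isBase64Encoded flag) and must return a dict with statusCode, headers, and body. Here’s a hedged sketch of both sides; read_proxy_request and proxy_response are hypothetical helpers, and the sample event is trimmed to the fields they use. One caveat: the signature check needs the exact bytes GitHub sent, so this sketch re-encodes the body as UTF-8 on the assumption that the payload is UTF-8 JSON.

```python
import base64
import json


def read_proxy_request(event: dict):
    """Return (lower-cased headers, raw body bytes) from a REST API AWS_PROXY event.

    Hypothetical helper for illustration.
    """
    headers = {k.lower(): v for k, v in (event.get("headers") or {}).items()}
    body = event.get("body") or ""
    if event.get("isBase64Encoded"):
        raw = base64.b64decode(body)
    else:
        raw = body.encode("utf-8")  # assumes a UTF-8 text payload such as JSON
    return headers, raw


def proxy_response(status: int, message: str) -> dict:
    """Build a return value in the shape a Lambda proxy integration expects."""
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": message}),
    }


# Trimmed, made-up event with only the fields the helpers read
event = {
    "httpMethod": "POST",
    "path": "/",
    "headers": {"X-GitHub-Event": "repository", "Content-Type": "application/json"},
    "body": '{"action": "created"}',
    "isBase64Encoded": False,
}
headers, raw = read_proxy_request(event)
print(headers["x-github-event"], raw)
print(proxy_response(200, "ok"))
```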

Then we tell Terraform to deploy these configurations to the “cop” stage. That means our API Gateway’s URL will look like https://(generated-id).execute-api.(region).amazonaws.com/cop, which is pretty cool.

The aws_lambda_permission resource ties the Lambda to the API Gateway. It grants the API Gateway the ability to invoke the Lambda, meaning the gateway doesn’t just send the data over: it actually kicks the Lambda on and then hands off the payload for it to run. Pretty awesome, really.

```hcl
resource "aws_api_gateway_deployment" "github_cop_api_gateway_deployment" {
  depends_on = [
    aws_api_gateway_integration.github_cop_api_gateway_lambda_integration,
    aws_api_gateway_integration.github_cop_api_gateway_lambda_root,
  ]
  rest_api_id = aws_api_gateway_rest_api.github_cop_api_gateway.id
  stage_name  = "cop"
}

resource "aws_lambda_permission" "lambda_permission" {
  statement_id = "APIGatewayToGitHubCopNewRepoTriggerLambda"
  action       = "lambda:InvokeFunction"
  # Referencing the function (instead of a hard-coded name) makes Terraform create it first
  function_name = aws_lambda_function.GitHubCop_New_Repo_Trigger.function_name
  principal     = "apigateway.amazonaws.com"
  source_arn    = "${aws_api_gateway_rest_api.github_cop_api_gateway.execution_arn}/*"
}
```
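To make the stage and the permission concrete, here’s a small Python sketch of the two strings involved: the invoke URL you’d paste into the GitHub App’s webhook URL field, and the source_arn pattern the permission allows. The API ID and account ID are made-up placeholders, and the helper names are hypothetical.

```python
def invoke_url(rest_api_id: str, region: str, stage: str) -> str:
    """Base URL of a deployed REST API stage (hypothetical helper)."""
    return f"https://{rest_api_id}.execute-api.{region}.amazonaws.com/{stage}"


def permission_source_arn(region: str, account_id: str, rest_api_id: str) -> str:
    """execution_arn plus "/*": any stage, method, and path of this API (hypothetical helper)."""
    execution_arn = f"arn:aws:execute-api:{region}:{account_id}:{rest_api_id}"
    return f"{execution_arn}/*"


# Made-up IDs for illustration
print(invoke_url("a1b2c3d4e5", "us-east-1", "cop"))
# https://a1b2c3d4e5.execute-api.us-east-1.amazonaws.com/cop
print(permission_source_arn("us-east-1", "111111111111", "a1b2c3d4e5"))
# arn:aws:execute-api:us-east-1:111111111111:a1b2c3d4e5/*
```

Because both the root path and {proxy+} are wired to the Lambda, the bare stage URL and any sub-path under it reach the same function.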

Lambda Configuration

I don’t think I’ll get to the Lambda code until part 2, but let’s go over the Lambda terraform configuration.

The Lambda code lives as a file in a sub-folder of our Terraform directory; you can see the exact path and name in source_file. Use ${path.module} so the path resolves relative to this module’s folder, even when this Terraform is called as a module.

This data source zips up the file and, whenever Terraform runs, hashes it to see if it’s changed. If it has, it’ll create a new zip file.

```hcl
data "archive_file" "githubcop_repo_trigger_lambda" {
  type        = "zip"
  source_file = "${path.module}/GitHubCopTriggerLambdaSource/GitHubCopRepoTrigger.py"
  output_path = "${path.module}/GitHubCopRepoTrigger.zip"
}
```
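For intuition, here’s roughly what archive_file does with a single source_file, sketched with Python’s zipfile module. As I understand it, the file lands at the root of the zip under its base name, which is why the handler can refer to GitHubCopRepoTrigger without the sub-folder. The zip_single_file function is hypothetical, and Terraform’s own archives may differ in details like timestamps and compression.

```python
import os
import tempfile
import zipfile


def zip_single_file(source_file: str, output_path: str) -> list:
    """Zip one file at the archive root and return the archive's member names.

    Hypothetical helper approximating archive_file's source_file behavior.
    """
    with zipfile.ZipFile(output_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        # arcname drops the sub-folder, so the .py sits at the top of the zip
        zf.write(source_file, arcname=os.path.basename(source_file))
    with zipfile.ZipFile(output_path) as zf:
        return zf.namelist()


with tempfile.TemporaryDirectory() as tmp:
    src_dir = os.path.join(tmp, "GitHubCopTriggerLambdaSource")
    os.makedirs(src_dir)
    src = os.path.join(src_dir, "GitHubCopRepoTrigger.py")
    with open(src, "w") as f:
        f.write("def lambda_handler(event, context):\n    return {}\n")
    members = zip_single_file(src, os.path.join(tmp, "GitHubCopRepoTrigger.zip"))

print(members)  # ['GitHubCopRepoTrigger.py']
```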

Then we actually build our Lambda. There’s a ton of complexity packed into this little snippet. First, filename points at the zip file built by the archive_file data source we just configured. We set a name (function_name) and assign the IAM role we built above (role).

The handler deserves some attention. The docs don’t explain very well what a handler is. The handler is what Lambda will run when invoked. For Python it’s always foo.bar, with a period in the middle. The first word is the name of the file it’ll look in. The second word (after the period) is the function in that file it’ll execute. AWS recommends calling the function lambda_handler, and who am I to argue. So our handler tells Lambda to look at a file called GitHubCopRepoTrigger (notably skipping the .py at the end of the filename) and execute its lambda_handler function.
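Here’s a minimal placeholder for what that handler string points at: a GitHubCopRepoTrigger.py file containing a lambda_handler(event, context) function. The body just returns a canned response in the shape API Gateway’s proxy integration expects; it’s a hypothetical stand-in, not the real GitHubCop logic from Part 2. The split_handler helper is also hypothetical and only illustrates the file.function rule for a top-level file.

```python
# GitHubCopRepoTrigger.py (hypothetical placeholder, not the real GitHubCop logic)
# handler = "GitHubCopRepoTrigger.lambda_handler" means: import this file's
# module (name without .py) and call its lambda_handler(event, context).
import json


def lambda_handler(event, context):
    # event: the request API Gateway forwarded; context: runtime info like the request ID
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": "hello from GitHubCopRepoTrigger"}),
    }


def split_handler(handler: str):
    """Illustrate the file.function rule for a handler in a top-level file."""
    module, function = handler.rsplit(".", 1)
    return module + ".py", function


print(split_handler("GitHubCopRepoTrigger.lambda_handler"))
# ('GitHubCopRepoTrigger.py', 'lambda_handler')
print(lambda_handler({}, None)["statusCode"])  # 200
```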

The timeout was a gotcha I ran into pretty quickly. Lambda’s default timeout is 3 seconds, which is really short! I’m fetching a few secrets and reading some JSON data, which I thought would be super fast, but it takes longer than 3 seconds, so the invocation timed out. I bumped it to 60 seconds and my code started working. You can set the timeout as high as 15 minutes.

Next are the layers that will be baked into this Lambda’s launch. Layers contain tools and configuration that will be present when your Lambda launches. AWS maintains a bunch of useful ones, like the one I’m using here, the AWS Parameters and Secrets Lambda Extension, which lets the function fetch (and cache) secrets from Secrets Manager. Notably, I tried to find this layer’s ARN using a data source and totally failed, so I ended up hard-coding it.
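Here’s a sketch of how Python code can use that extension once the layer is attached. The extension serves secrets over a local HTTP endpoint (port 2773 unless PARAMETERS_SECRETS_EXTENSION_HTTP_PORT says otherwise) and expects the function’s AWS_SESSION_TOKEN in an X-Aws-Parameters-Secrets-Token header. It uses urllib from the standard library; the helper names are hypothetical, and get_secret_string only works inside a Lambda with the layer present.

```python
import json
import os
import urllib.parse
import urllib.request


def build_secret_request(secret_id: str, session_token=None, port=None) -> urllib.request.Request:
    """Build a GET request for the Parameters and Secrets Lambda Extension.

    Hypothetical helper; defaults come from the Lambda environment.
    """
    port = port or os.environ.get("PARAMETERS_SECRETS_EXTENSION_HTTP_PORT", "2773")
    token = session_token or os.environ.get("AWS_SESSION_TOKEN", "")
    query = urllib.parse.urlencode({"secretId": secret_id})
    url = f"http://localhost:{port}/secretsmanager/get?{query}"
    # The extension only answers requests that carry the function's session token
    return urllib.request.Request(url, headers={"X-Aws-Parameters-Secrets-Token": token})


def get_secret_string(secret_id: str) -> str:
    """Only works inside a Lambda with the extension layer attached."""
    with urllib.request.urlopen(build_secret_request(secret_id), timeout=5) as resp:
        # The response mirrors Secrets Manager's GetSecretValue output
        return json.loads(resp.read())["SecretString"]


# Made-up secret name and token; builds the request without sending it
req = build_secret_request("github-pat", session_token="example-token")
print(req.full_url)
```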

Then we set source_code_hash. This is the trigger for updating the Lambda with new code. We take the base64-encoded SHA-256 hash of the zip file created by the archive_file data source and store it. If that hash doesn’t match the next time Terraform runs, the code has been updated, and the new code should be deployed.

Finally (see what I mean about the complexity of this one resource?!), we set the runtime for our Lambda. I wrote this for Python 3, and at the time 3.7 was the newest runtime I found that still included the requests library, which I use to make the REST calls to GitHub.

```hcl
resource "aws_lambda_function" "GitHubCop_New_Repo_Trigger" {
  filename      = data.archive_file.githubcop_repo_trigger_lambda.output_path
  function_name = "GitHubCopRepoTrigger"
  role          = aws_iam_role.GitHubCopNewRepoTriggerRole.arn
  handler       = "GitHubCopRepoTrigger.lambda_handler"
  timeout       = 60
  # Layers are packaged code for lambda
  layers = [
    # This layer permits us to ingest secrets from Secrets Manager
    "arn:aws:lambda:us-east-1:1234567890:layer:AWS-Parameters-and-Secrets-Lambda-Extension:4"
  ]
  source_code_hash = data.archive_file.githubcop_repo_trigger_lambda.output_base64sha256
  runtime          = "python3.7"
}
```
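The hash format matters because source_code_hash holds a base64-encoded SHA-256 digest (the same format Lambda reports as CodeSha256), not the hex string most tools print. Here’s a small Python sketch that computes that format for any file; base64_sha256 is a hypothetical helper name, and the usage example hashes an empty file so the result is easy to check.

```python
import base64
import hashlib
import os
import tempfile


def base64_sha256(path: str) -> str:
    """Base64-encoded SHA-256 of a file, the format used by output_base64sha256.

    Hypothetical helper for illustration.
    """
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        # Read in chunks so large deployment packages don't need to fit in memory
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return base64.b64encode(digest.digest()).decode("ascii")


with tempfile.TemporaryDirectory() as tmp:
    empty_file = os.path.join(tmp, "empty.bin")
    open(empty_file, "wb").close()  # an empty file keeps the expected value well known
    empty_hash = base64_sha256(empty_file)

print(empty_hash)  # 47DEQpj8HBSa+/TImW+5JCeuQeRkm5NMpJWZG3hSuFU=
```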

Summary and Next Time

Okay, we’re not even to the actual Python3 code of the Lambda, or how we updated the GitHubCop Action to take a REST argument of a single repo for processing, and we’re already at 2.5k words, so let’s break this into two parts.

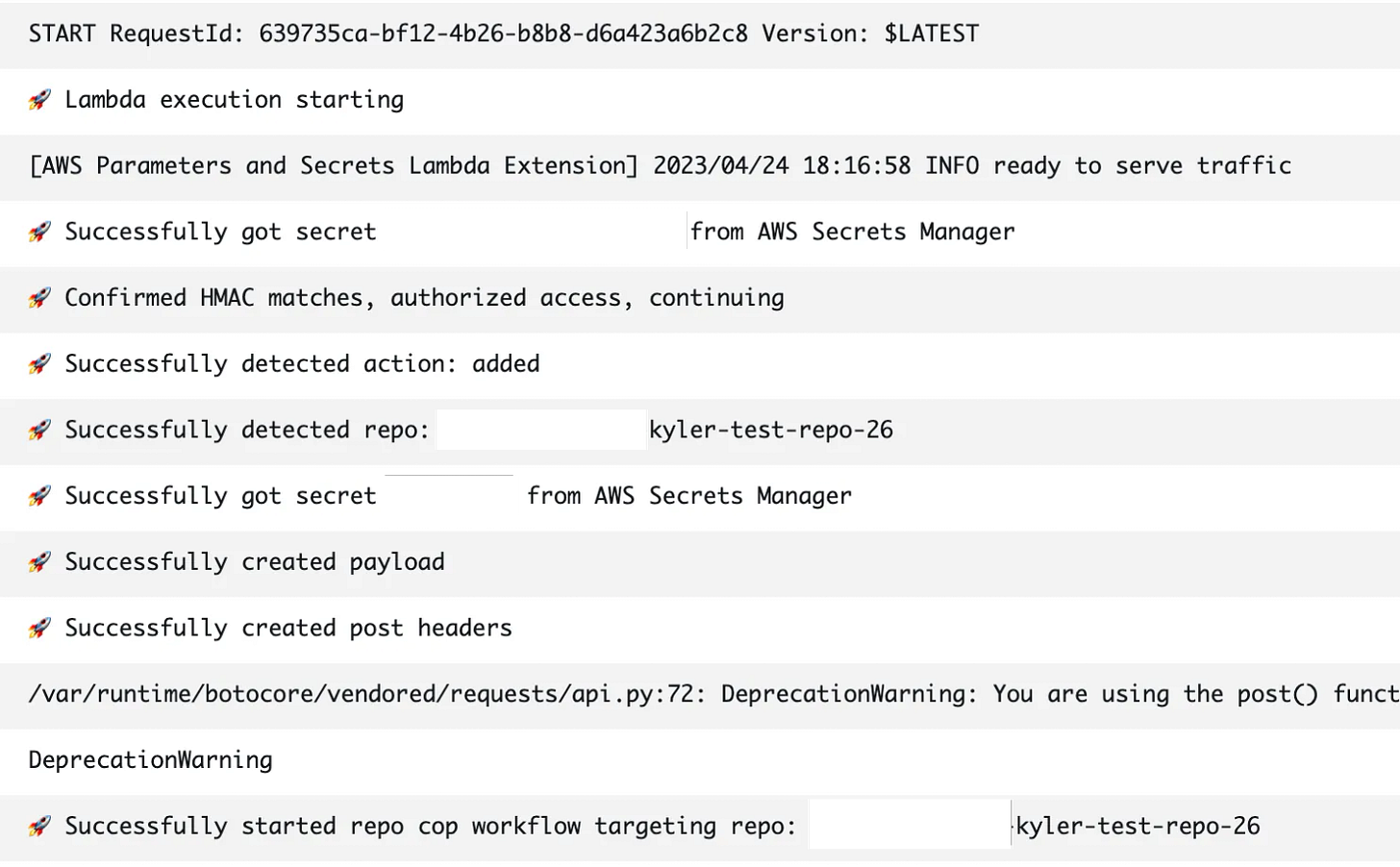

During Part 1, we went over the theory around what we’re doing. We created a GitHub App and set it to send webhooks, signed with a hash seeded by a shared secret, to our API Gateway. We configured that API Gateway to operate in {proxy+} mode and granted it the ability to trigger our Lambda. Then we built our Lambda around mysterious Python 3 code that we haven’t gone over yet.

For Part 2 (you can find it here), we’re talking code all day. We’ll go over the Python 3 code I wrote to validate the SHA-256 signature header from GitHub, isolate the event that triggered the webhook, grab a few secrets, and trigger a GitHub Action with a REST call made with the requests library. We’ll also go over the changes necessary to update an Action to take a REST input, and how I configured my Action to operate on only a single repo when called this way (but all repos if we want).
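As a preview of that REST call, here’s a hedged sketch that builds a request for GitHub’s workflow dispatch endpoint. It assumes the GitHubCop workflow declares on: workflow_dispatch with an input named repo_name; the org, repo, workflow file name, and input name are all hypothetical, and it uses the standard library’s urllib rather than requests so it runs without extra packages. Part 2 covers how the Action was actually wired up.

```python
import json
import urllib.request


def build_dispatch_request(token, owner, repo, workflow_file, ref, inputs):
    """Build a POST to GitHub's workflow_dispatch endpoint (hypothetical helper).

    Assumes the target workflow declares `on: workflow_dispatch` with matching inputs.
    """
    url = (
        f"https://api.github.com/repos/{owner}/{repo}"
        f"/actions/workflows/{workflow_file}/dispatches"
    )
    payload = json.dumps({"ref": ref, "inputs": inputs}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=payload,
        method="POST",
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
            "X-GitHub-Api-Version": "2022-11-28",
            "Content-Type": "application/json",
        },
    )


# Every value here is a made-up placeholder
req = build_dispatch_request(
    token="not-a-real-pat",
    owner="my-org",
    repo="githubcop",
    workflow_file="githubcop.yml",
    ref="main",
    inputs={"repo_name": "shiny-new-repo"},
)
print(req.get_method(), req.full_url)
```

Sending it is then urllib.request.urlopen(req); GitHub answers a successful dispatch with 204 No Content.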

Here’s all the code for both so you can do it too!

GitHub - KyMidd/GitHubCopLambda-EventDriven


Good luck out there.

Kyler