🔥Let’s Do DevOps: Terraform AWS Ec2 Module (Required)

Hey all!

Hey all!

Terraform has this great concept of “modules” which have a ton of uses. One of the most common is to have a resource-specific module that builds a resource with the required security and operational settings your org has standardized on. That lets your module receive just the bare minimum of values (making life easier for developers), and still building things appropriately and securely.

Terraform’s behavior with most resources and calls works well in this way, but interestingly, AWS EC2 is not in that list. There is a significant bug with how Terraform (and the AWS API) handles building ec2 modules. Here’s the GitHub bug report (opened fully 3.5 years ago at time of this blog’s publication):

Inline EBS block device modification on AWS instance not working · Issue #2709 ·…

You can't perform that action at this time. You signed in with another tab or window. You signed out in another tab or…github.com

The problem arises when a user attempts to update a non-root EBS volume, which is the way AWS manages additional disks for your hosts. Rather than supporting an uptime change (like the AWS console does), Terraform requires you to destroy the host and rebuild from scratch.

WHAT???

Given that instances generally store tons of data and aren’t expected to be rebuilt frequently (certainly not by a change this is seamless and easy in the web console), this comes as a pretty big surprise — and likely a very unhappy one, for a terraform consumer that accidentally destroys a host when they just wanted to give it some more storage for something.

Why The Heck?

This problem arises due to how Terraform manages resources, which is to say — monolithically, vs how the AWS provider and APIs manage resources, which is macro-like.

Terraform has had some issues around these same types of situations. For complex resources, like EKS, AKS, batch, CloudWatch, etc., when you create a single resource in the console, you’re actually triggering a macro that builds lots of resources. Terraform assumes that a “resource” block maps to a single resource only, not to a whole bunch of resources that are also built, and has trouble keeping track.

In this case, the AWS Provider developers found a way to manage the non-root EBS volumes, but the behavior is (and has been for years), a bit wonky.

First, changes in the live env of EBS size aren’t detected by Terraform. For reasons I don’t understand, Terraform doesn’t check in on the attached EBS volumes’ size and true it up when the config doesn’t match.

Second, when the Terraform config is updated to resize an EBS volume (only increase, decrease not supported via API for some reason), the provider says that the only way to increase the disk size (an entirely separate resource from the instance) is to destroy the instance, rebuild it, then rebuild the EBS with the larger size, and attach it.

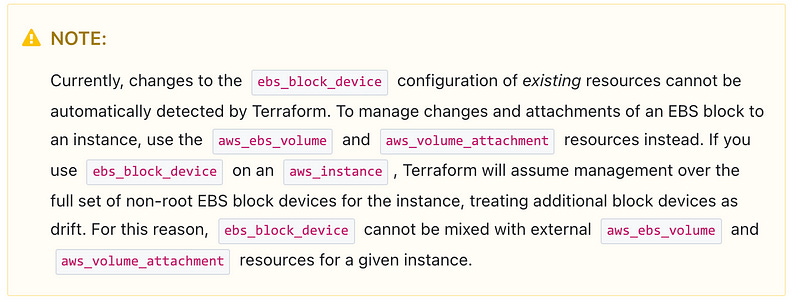

There is a warning on the aws_instance resource page which sort of explains the problem, but isn’t clear that using this attribute will result in data loss.

How to Avoid

The only real way to avoid this is to use separate resources to build additional EBS volumes and then attach them. But that requires learning about the problem, then either having all your developers understand the problem and work around it, or building a module that can handle the problem.

Or you could copy my work — I build an ec2 instance module that receives config in a very similar way to the ec2 instance resource page, but rather than destroying your instance when you change an EBS size, it manages separate resources within the module.

| ebs_block_device = { | |

| "/dev/sdl" = { | |

| volume_type = "standard" | |

| volume_size = 50 | |

| encrypted = true | |

| kms_key_id = var.kms_key_arn | |

| }, | |

| "xvdf" = { | |

| volume_type = "gp2" | |

| volume_size = 200 | |

| encrypted = true | |

| kms_key_id = var.kms_key_arn | |

| } | |

| } |

It’s saved me some heartaches. You can find the code here:

KyMidd/Terraform-AWS-EC2-Instance-Module

Contribute to KyMidd/Terraform-AWS-EC2-Instance-Module development by creating an account on GitHub.github.com

However, you likely already built your resources, right? Well then, it’s time to use terraform commands to remove it from management and then add the resources again properly so they’re managed correctly.

First, remove the resource from terraform management:

terraform state rm aws_instance.ServerNameNow the resource isn’t managed at all by terraform. However, that’s not what we want either. Now let’s bring the resource back into management. In case your browser plays with the quotes here, they’re single straight quotes, not “smart” quotes.

terraform import 'module.ServerName.aws_instance.aws_instance[0]' i-1234567890This brings your host back into AWS management (including the root disk), but you’ll also need to bring each non-root EBS disk into management individually. The import commands look like this:

terraform import 'module.ServerName.aws_ebs_volume.ebs_block_device_volumes["/dev/sdl"]' vol-1234567890

terraform import 'module.ServerName.aws_ebs_volume.ebs_block_device_volumes["xvdf"]' vol-1234567890Once done, test out updating the EBS disks. Rather than destructively rebuilding your instance, it now simply updates the EBS disk size. A much nicer, and much more expected, outcome.

Good luck out there!

kyler