Tech Talk: Migrating 1.5k Repos to GitHub — Mistakes, Tools, and Lessons Learned

I gave this talk October 16, 2023, at CloudSecNext 2023. The PowerPoint deck (with all links to tools) is here.

Recording

https://www.youtube.com/live/UrYesg5dQ9k?t=24079s

Transcript

The text for the talk follows here:

Intro

Hey all!

My name is Kyler Middleton, and I’m here at CloudSecNext for the second time! Thanks to SANS for thinking I did well enough last year to invite me back!

Consulting Project Types

I used to do consulting work, and I noticed that I was asked to help on projects of two varieties. The projects were all different scopes and verticals — it wasn’t the technology stack that caused folks to require outside help — it was the people.

For the good projects, folks were self-aware that they didn’t have the technological skills to do the project, and they wanted someone there to help them get stuff done.

The other kind of project was the difficult path. These places had a culture that wasn’t welcoming to change, and they required someone from outside their culture to come in and be the bad guy to make all these changes.

And you knew, when you recognized these types of projects, that they’d fight you the entire time, because as much as leadership and the business knew that you were there to help, the rank and file were unhappy you were there, and often felt threatened by you changing the environment they were comfortable in.

Thankfully I’m here today to talk about the first type of project — where I’m consulting on a project with willing folks, who are aware that there are technical gaps they’re unable to fill, and project requirements they don’t have time to fill.

However, as with any project that touches how people work, there’s an implicit threat: if you break stuff, and make life harder for the people doing the work and running the business, you can easily slip into the villain role, and that project will become absolutely impossible to complete successfully.

Let’s Move The Cheese

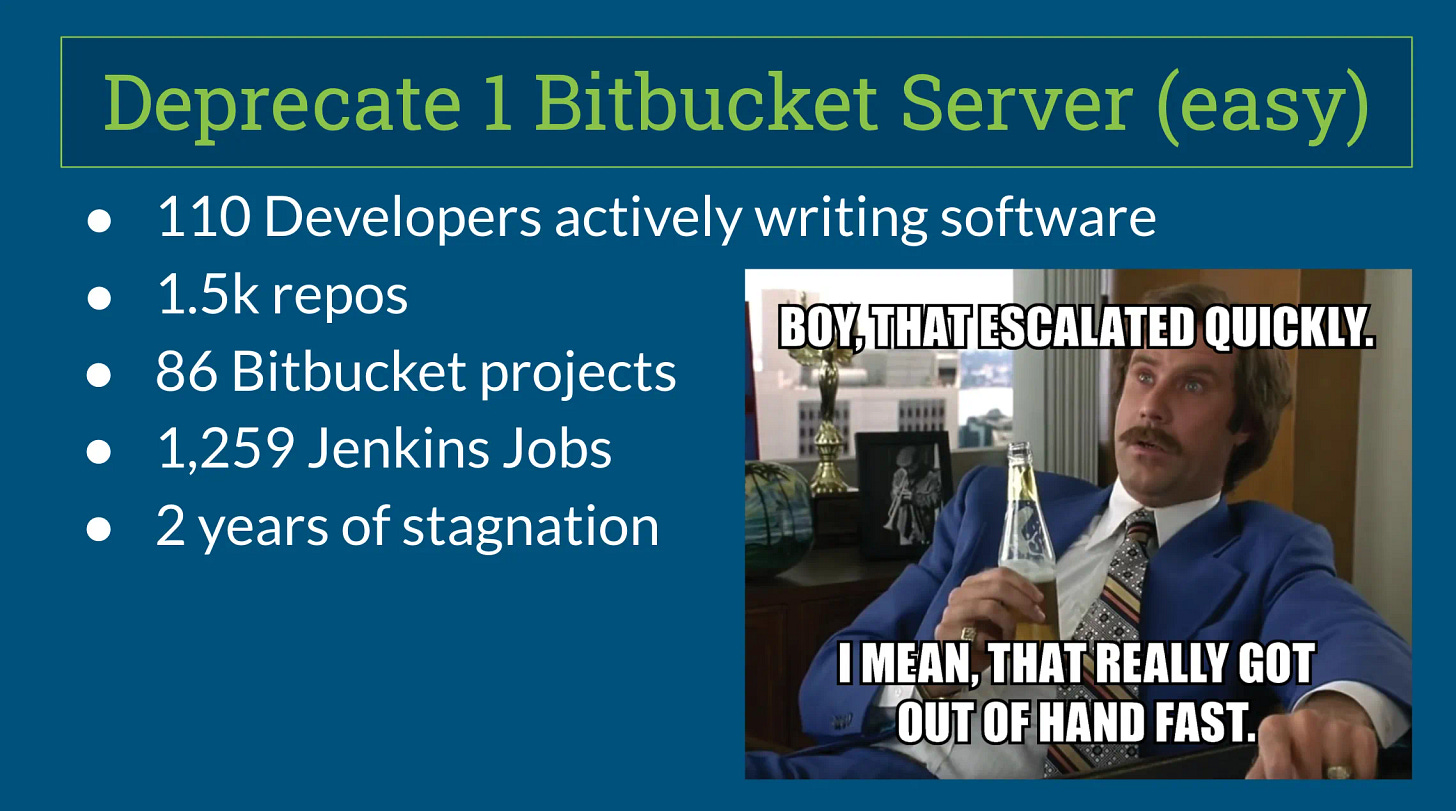

The project specifically was to deprecate a Bitbucket server. I mean, that’s easy, right? Well, there were 1,500 code repositories across 86 Bitbucket projects, built by 1,259 Jenkins jobs across two Jenkins clusters served by several dozen builders.

And that’s just the technical ask. Here’s the business ask — Make sure that all 110 engineers that commit to the codebase, and troubleshoot issues, and actually bring in the real money that pays my paycheck, are kept updated on how things work as we partially migrate, and are able to keep working.

And here’s the keep-your-job ask — Keep the plane flying while you’re doing surgery on it. We would be very unhappy to go down due to shipping the wrong version of a product, or breaking a deploy script so it pushes the wrong version of code to production.

And this is healthcare — we are a heavily regulated industry in the US — if something I do drives a data leak, we could quite literally be facing a lawsuit and stiff penalties.

Oh also, this project has been assigned to folks for literally 2 years, and no significant progress has been made.

So. Good luck me!

Why Does Where Code is Stored Matter?

This is a great question to start understanding the scope of this project. Where the code is stored matters because of what’s integrated with your code storage, which is to say, everything.

Within the code storage, pull requests build and validate that the code you’ve written won’t cause issues. Code that fails those checks shouldn’t be permitted to merge to your deploy branches.

When code is merged to a release branch, it should be “deployed”. What this means is different for almost every project. Right to prod? Sometimes! To pre-prod for smoke testing? Sometimes!

All of these integrations and automations are orchestrated by the Operations and DevOps teams. At any significantly sized company, you likely have thousands of concurrent work streams active all the time!! That is a huge amount of concurrency and human-hours to keep everything distinctly not-on-fire.

So all we need to understand is everything? Okay, awesome.

Break it down

The Martian is one of my favorite movies. In it, Matt Damon (spoilers) accidentally gets left on Mars alone, and is faced with the impossible feat of staying alive for years, despite the inhospitable environment, and having only enough food for a few months, and machines intended to last 60 days at most.

There’s this great quote, that I’ll read to you, captive audience.

“At some point, everything’s gonna go south and you’re going to say, this is it. This is how I end. Now, you can either accept that, or you can get to work. That’s all it is. You just begin. You do the math. You solve one problem and you solve the next one and then the next. And if you solve enough problems, you get to come home.”

Now, we’re not in a life and death situation, but the mentality is the same. Big projects like this can’t all fit in your head at the same time. If you try to do that, you get overwhelmed and your gut will tell you to just give up.

The only way to do impossibly large projects is to break them down into small component problems, and solve those, one at a time.

Find an issue, solve it. Next problem, solve it.

Let’s solve some problems.

Problem 1: GitHub Pull Requests should Build and Validate in (on-prem) Jenkins

We elected not to move from Jenkins to GitHub Actions as part of this project — any time you can claw back scope on a massive project, do it! You can always do that as phase 2. However, that means we have a new problem — when you open a pull request on a GitHub-based repo, we now need SOMETHING to happen where Jenkins learns about this new activity, builds it, and we somehow also surface to the Pull Request the results of the build.

GitHub lets you configure webhooks on events in your repos, where we could notify some amazing looking extensions for GitHub built by the Jenkins open-source community that something has happened. However, our internal Jenkins, for one reason or another, is years older than the oldest supported version of Jenkins for any of the tools I could find. I even reached out to one of the authors to see what I could do to make it work, and they laughed and said to update our Jenkins.

Given that I’m afraid to touch that 8 year old Jenkins box, I decided to write a tool. Actually a bunch of tools to work in tandem.

Also, our Jenkins server is internal to our network, and being older, we didn’t want to expose it to the internet. So how would GitHub talk to it? A senior developer offered a great idea — just have an internal builder talk directly to Jenkins! So that’s what we did, we use an internal container-based builder pool to talk to Jenkins. Firewall, bypassed.

Tool: Notify Jenkins of any GitHub Commit (commitNotify)

https://github.com/KyMidd/ActionJenkinsCommitNotification

First, when something happens in GitHub, we need Jenkins to know about it. Jenkins has an unusual model of finding out what has happened — it doesn’t regularly poll your source control for updates, it instead waits for a notice API call called a commitNotify message. When it receives that message, it polls the source code to see if there are any commits to build.

If it finds any, it builds those commits and stores the status of the builds. That’s all Jenkins internal logic that I thankfully didn’t have to mess with — we just had to let Jenkins know. Done.
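A minimal sketch of that notification, assuming the endpoint is the Jenkins Git plugin's `/git/notifyCommit` and that plain HTTP from the internal builder to Jenkins is allowed. The Jenkins address and repo URL in the usage note are placeholders, and the function names are hypothetical rather than taken from the Action linked above.

```python
from urllib.parse import urlencode
from urllib.request import urlopen


def build_notify_url(jenkins_base: str, repo_url: str, branch: str = "") -> str:
    """Build the URL that asks Jenkins to go poll a repo for new commits."""
    params = {"url": repo_url}
    if branch:
        # Optional: limit the poll to jobs that build this branch.
        params["branches"] = branch
    return f"{jenkins_base.rstrip('/')}/git/notifyCommit?{urlencode(params)}"


def notify_jenkins(jenkins_base: str, repo_url: str, branch: str = "") -> str:
    """Send the notification and return Jenkins' plain-text response body."""
    url = build_notify_url(jenkins_base, repo_url, branch)
    with urlopen(url, timeout=30) as response:
        return response.read().decode()
```

Calling `notify_jenkins("https://jenkins.example.internal", "git@github.com:example-org/example-repo.git")` from the internal builder would be the whole job of this tool; the response text is what the next tool reads to learn which jobs were scheduled.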

But notice, it doesn’t like, go tell GitHub what was done. How is GitHub going to know whether the build succeeded or failed?

Let’s build another tool!

Tool: GitHub Pull Requests Should Track Jenkins Build

https://github.com/practicefusion/ActionPRValidate_AnyJobRun

But then how do we surface the results of the build? Lots of times folks will commit software that doesn’t work — that’s the beauty of CI/CDs, they’ll tell you if it doesn’t work!

So I wrote another GitHub Action. This Action is triggered within the context of a Pull Request commit. When the Action is spun up, it’s aware of the hash of the commit that triggered it, so we start polling Jenkins’ job API to see if any jobs with the exact same commit have run, and get their status.

First of all, we send a commitNotification (just like the last tool does), and in the API response from Jenkins we can see which jobs were triggered. If we find the right job name was triggered, we start polling the Jenkins job queue for that job name. On each poll, we look at the entire history of jobs retained (usually a few dozen), and filter based on branch name and commit hash, both things Jenkins stores for queued jobs. If we don’t find a match, we repeat.

When we find a match, we know that JobID is the job we want to watch! We poll Jenkins’ queued job list with that ID until the job starts — when that happens, we get a job URL.

We keep polling that Job URL until Job Status has populated, which means the job has finished. We check to see if it’s a “happy” status. If yes, we exit 0 so our GitHub Action sees a successful result. If Jenkins has a “sad” status, then we exit 1 to tell the GitHub Action Jenkins didn’t build properly.
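The final polling step can be sketched roughly like this. It assumes the job URL's JSON API returns a `result` field that stays null until the job finishes, and it treats only `SUCCESS` as a "happy" status, which is my assumption. `fetch_job` is a hypothetical callable that would fetch and parse `<job URL>/api/json`; the poll interval and limit are illustrative.

```python
import time
from typing import Callable, Optional


def wait_for_result(
    fetch_job: Callable[[], dict],
    poll_seconds: float = 15.0,
    max_polls: int = 240,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[str]:
    """Poll until Jenkins reports a result; return it, or None if we give up."""
    for _ in range(max_polls):
        result = fetch_job().get("result")
        if result is not None:  # stays null while the job is still running
            return result
        sleep(poll_seconds)
    return None


def exit_code_for(result: Optional[str]) -> int:
    """Map a Jenkins result to the exit code the GitHub Action should return."""
    return 0 if result == "SUCCESS" else 1
```

Passing `sleep` in as a parameter keeps the loop easy to test without actually waiting, and a timeout is treated the same as a failed build so a stuck job never shows up as green on the Pull Request.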

This tool took a lot of work! There were a lot of bugs to squash.

For instance, GitHub Actions have the concept of “run again”. In GitHub, that means “build this exact same commit on this branch again”. Jenkins also has the concept of “run again”, but it means something entirely different — in Jenkins-land, it means to build the newest commit on any branch. Because of this totally different way of viewing the concept of “re-run”, I disabled the re-run functionality. I’d rather it not work than provide bad information to the team. I hope to fix this one day, but we might migrate all our builds to GitHub Actions before that day comes.

Weird Thing

I’m not sure why this is, but when you’re extracting the commit hash your Action is run against, it’s populated. However, it’s wrong. This totally stymied me for a while. What the heck? Where did that extra commit come from?

I never figured that out. If you know, please let me know!

My hacky solution is to clone the git tree down and grab the second most recent commit. That happens to be correct, and that’s the one we poll Jenkins for.

With that populated, we make sure that the commit hash GitHub is tracking and the commit hash Jenkins is building actually match, and we’re providing accurate information to our Pull Request checks.
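One possible explanation, offered as an assumption rather than a confirmed diagnosis of this setup: for `pull_request` events, GitHub runs the workflow against a temporary merge commit (the PR head merged into the base branch), so the SHA the Action sees is that merge commit and the real head commit sits one step behind it. If that is the cause, the head SHA can also be read from the event payload instead of from a clone. The sketch below reads it from the file named by the `GITHUB_EVENT_PATH` environment variable; the function name is hypothetical.

```python
import json
import os
from typing import Optional


def pr_head_sha(event_path: Optional[str] = None) -> Optional[str]:
    """Return the PR head commit SHA from the Actions event payload, if present."""
    path = event_path or os.environ.get("GITHUB_EVENT_PATH")
    if not path:
        return None
    with open(path, encoding="utf-8") as handle:
        event = json.load(handle)
    # Only pull_request-style events carry this key; others return None.
    return event.get("pull_request", {}).get("head", {}).get("sha")
```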

Speaking of commits…

Problem 2: Compliance Says Do It

There aren’t many places in a DevOps org where a single business unit can say “this is the way it is,” and folks just listen. Compliance is one of those places. You can argue that you shouldn’t NEED to satisfy a particular law with compliance all you want, and their answer is going to be, “Oh, you poor baby. Do it anyway.”

One of those regulations is that absolutely every commit (which is techy speak for a group of changes to a codebase) should contain a reference to a valid ticket. Those tickets in our ticketing system are required to have security and management approvals for code changes, which gives us great audit-ability of who approved changes, and why they were implemented.

The human part is beyond my scope, but requiring that every commit has a valid ticket number is not.

Bitbucket has this great functionality I’ll call “pre-commit hooks” (strictly speaking, they’re server-side pre-receive hooks), which means that before a commit is accepted by the server, it is read and checked against policies you set. If the commit doesn’t meet those requirements, it can be rejected, which makes life easy for developers. It even prints a cute little teddy bear to cut your sadness. Truly, a modern solution.

GitHub has pre-commit hooks, but only for their on-prem server solution. For their public, web-based solution, they don’t support it. I asked our TAM why and he said they’d immediately be DDoS’d off the web if they turned it on, and I’m not sure if that’s exactly true, but regardless, time for a new tool.

Tool: Feed the YaCC

Marketplace: https://github.com/marketplace/actions/check-commits-for-jira

In our Bitbucket server, we used an extension called YaCC, which stood for Yet Another Commit Checker. Due to the tight integration between Jira and BitBucket (both products of the company Atlassian), it required almost no configuration.

Not so when I’m building from scratch.

Given that I can’t do pre-commit checks, I decided to write post-commit checks as a Pull Request Action. It finds the commit hash that we branched our pull request off of, and uses it to build a list of the commits that exist in our PR branch.

Then we iterate over each one, and pull out the commit message. We have a list of non-compliant messages that are nevertheless accepted, like those generated automatically by GitHub when reverting commits, or merge conflict true-ups, or other unavoidable non-compliant commits. If it meets that static list of exceptions, it’s permitted.

If it doesn’t meet that list, we use regex to retrieve the Jira ticket at the beginning of the commit message. Each extracted Jira ticket number is checked against our ticketing system to make sure it exists.

If any commit doesn’t contain a ticket number in its commit message, or references a ticket that doesn’t exist, that PR is blocked from merging. Developers are expected to squash their branch back into shape or abandon it.
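The checking logic above can be sketched as follows. The regex, the exception prefixes, and the `ticket_exists` callable (which would wrap a lookup against the ticketing system) are all illustrative assumptions, not the real Action's values.

```python
import re
from typing import Callable, Iterable, List, Optional

# Hypothetical pattern: a Jira key such as "OPS-123" at the very start of the message.
TICKET_AT_START = re.compile(r"^([A-Z][A-Z0-9]+-\d+)")

# Hypothetical exception list: prefixes of auto-generated messages we let through.
ALLOWED_PREFIXES = ("Merge branch ", "Merge pull request ", "Revert ")


def extract_ticket(message: str) -> Optional[str]:
    """Return the ticket key at the start of a commit message, if any."""
    match = TICKET_AT_START.match(message.strip())
    return match.group(1) if match else None


def non_compliant(
    messages: Iterable[str], ticket_exists: Callable[[str], bool]
) -> List[str]:
    """Return the commit messages that should block the PR from merging."""
    failures = []
    for message in messages:
        if message.startswith(ALLOWED_PREFIXES):
            continue  # on the static exception list, so it is permitted
        ticket = extract_ticket(message)
        if ticket is None or not ticket_exists(ticket):
            failures.append(message)
    return failures
```

The Action would then exit non-zero whenever `non_compliant(...)` returns anything, which is what blocks the merge.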

This isn’t nearly as seamless as a pre-commit hook, but it works. Good enough for now!

Problem: GitHub Repos are Islands

Okay, I have my repo-specific functionality sorted out! At least the validation and PR build and Jenkins integration stuff. I initially built the Actions in every repo, but that quickly got out of hand. I can’t be doing 1500 PRs every time I have an update to the code in any of those Actions.

In Bitbucket, there are great hierarchical management tools. For instance, if you want every single repo to trigger a particular Jenkins job, and require it to pass before the PR will merge, you can do that from a single, central place. Or you can target policies like this at a “Project”, which is a grouping of repos.

GitHub Orgs don’t have that concept — every Repo is an island, and configuration and policies are almost entirely stand-alone. GitHub is working to fix this, but the state of the art today is that Repos largely are configured one by one.

Which makes the problem of “put these Actions into every repo” a significantly more annoying issue.

The first step was to write Actions YAML files that call my centralized Actions. That way I only need to solve this problem once (or maybe a few times, as I fine-tune Action triggers and other local-to-repo stuff). However, most of the iteration on these Actions will be on the Action source itself, and for that I only need to update that one repo, rather than every single other one.

To get to that point, however, we need to deploy these Actions everywhere.

Tool: Deploy Actions to Every Repo

https://medium.com/@kymidd/lets-do-devops-update-files-in-hundreds-of-github-repos-5262dfe5f529

Script to clone repo, update it (sed), branch, commit, push, PR, force-merge with admin rights

Okay, the problem set is to update absolutely every repo in the entire GitHub organization to have the version of files we have created in a template repo. These local files represent how every repo’s version of these files should look.

If the files are new, we should create them. If they’re not new, we should over-write the versions there. If there are no changes, we should do nothing. This is called idempotence: if our run is interrupted for some reason, it won’t cause an issue to run it again, and it’s a hallmark of software that works well enough for an enterprise.

If you were to do this for a single repo, you’d clone the repo down to your computer, copy and paste the template files to the correct location of the repo copy on your computer, then do a branch, commit, and push to remote. Then you’d go get the PR merged.

GitHub helpfully lets us force-merge and bypass any branch protection rules (as long as the user account we’re using has admin rights, and the branch protection permits it).

So I basically just did that: I used the GitHub API to get a list of all the repos in the entire Org, then I cloned each one in series, copied any template Action files I need into the clone (over-writing what was there), then did a branch and commit. I initially was going to write a complex hash comparer function to see if the files were updated, and then I realized that doh, that’s exactly git’s job.

So I do a git add with verbose mode turned on (did you know git add had a verbose mode? I didn’t before this project) and it prints out if there is a change to any file. If yes, I set a canary variable that we need to push and merge the branch.

If not, there are no changes, maybe because the repo has already been updated. We just delete the local clone and move on to the next repo.
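A rough sketch of that change detection, assuming `git add --verbose` prints one `add '<path>'` (or `remove '<path>'`) line per file it stages and nothing when the working tree already matches. The function names are hypothetical, and the linked script does the same job in shell.

```python
import subprocess


def has_staged_changes(verbose_output: str) -> bool:
    """True if `git add --verbose` reported staging or removing any file."""
    return any(
        line.startswith(("add '", "remove '"))
        for line in verbose_output.splitlines()
    )


def stage_and_detect(repo_dir: str) -> bool:
    """Stage everything in a local clone and report whether anything changed."""
    completed = subprocess.run(
        ["git", "add", "--verbose", "--all"],
        cwd=repo_dir,
        check=True,
        capture_output=True,
        text=True,
    )
    return has_staged_changes(completed.stdout)
```

The return value of `stage_and_detect` plays the role of the canary variable: `True` means branch, commit, push, and merge; `False` means delete the clone and move on.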

This obviously isn’t a very elegant way to do this. I think there’s probably a way to remotely create all these files instead of having to clone every single repo. But barring a few special cases, most of our 1500 repos aren’t significantly large, so it only took about an hour to clone every single one and get it updated.

Put this software in the “works well enough” category, and we move on.

Now, we talked about repo-specific branch protection rules. How in the world are we going to deploy them to every repo? Let’s write a new tool.

Tool: Call the Cops!

https://github.com/KyMidd/GitHubCop

The file deployer stages all the Actions, CODEOWNERS files, and any other file we need to modify in the repo. Woot!

However, what about all the repo settings? Again, the “island”-nature of repos in a GitHub Org shows its head — even though we have branch policies that should apply to all, or almost all repos, we can’t configure them at the Org level — we are required to configure them per-branch, per-repo, on every. single. repo.

Early on when establishing this problem-set, I decided I wasn’t going to attempt any part of this manually. If we want to change absolutely any part of these branch protections or other settings, it would take either doing something 1500 times, or a bespoke tool each time to update.

And that’s pretty much what I started doing — building separate tools that I’d run in sequence that would, for instance, go into every repo, and set the “delete branch after merging PR” check-box. And a separate tool for the “Enable Actions from Enterprise” check-box. And eventually I had a whole folder of scripts that I’d have to run in order, and decided to combine them into a single Action I called the GitHub Cop.

I had some developers who weren’t thrilled with the Police association, so for some Orgs I’ve deployed this in, we called it Inspector GitHub, a play on Inspector Gadget.

Go go gadget cloud security!

This tool is a combination of REST and GraphQL API calls that writes all the settings we’ve established for the Organization to every repo in sequence. There are third-party tools which do the same, but I wanted to have ultimate control here of every setting, and if there were any weird work-arounds required for any setting, I wanted to be the one to do it (Note to author: Am I a masochist? Check this after presentation)

This Action uses a CSV file of repo configuration mutations to customize any settings that particular repos require that are non-standard. Every other setting that isn’t over-ridden by a mutation is standardized across the entire Org.
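To illustrate the baseline-plus-overrides idea, here is a small sketch. The CSV column names (`repo`, `setting`, `value`) and the two baseline settings are invented for the example; the real file layout and settings list may differ.

```python
import csv
import io
from typing import Dict

# Hypothetical org-wide baseline; the real list of settings is much longer.
BASELINE = {"delete_branch_on_merge": "true", "required_approvals": "1"}


def load_overrides(csv_text: str) -> Dict[str, Dict[str, str]]:
    """Parse rows of repo,setting,value into {repo: {setting: value}}."""
    overrides: Dict[str, Dict[str, str]] = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        overrides.setdefault(row["repo"], {})[row["setting"]] = row["value"]
    return overrides


def settings_for(repo: str, overrides: Dict[str, Dict[str, str]]) -> Dict[str, str]:
    """Baseline settings with any repo-specific overrides layered on top."""
    return {**BASELINE, **overrides.get(repo, {})}
```

A repo with no row in the CSV simply gets the baseline, so the file only ever lists the exceptions.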

This came in very very helpful when we decided to implement ~45 auto-link references in every repo. Even though these are literally exactly the same references in every repo, they are configured per repo. GitHub assumes you have literally weeks to go into each Repo and click through the configurations, and I absolutely do not have that amount of free time, so I added a few functions to the GitHub Cop and the next time it ran, it deployed 45 references to 1500 repos, a total of 67,500 changes across a single Org.

This Action runs nightly, and cleans up the streets (like Batman!). Some of the other tools are ones which are only used for the migration, but this tool is incredibly valuable to keep new and existing repos standardized and securable, even with immense sprawl.

Tool: Lambda that Cop!

https://github.com/KyMidd/GitHubCopLambda-EventDriven

(Created a github app that notifies an AWS lambda that kicks off GitHub Cop to target only a single repo)

The GitHub Cop is effective, and will remain so, but it’s unfortunately pretty slow — it takes a long time to remedy 1500 repos! Even if we trigger the GitHub Cop when a new repo is created, it still might take some time to work through it. That’s not ideal.

Our GitHub TAM (hey Jon Jozwiak! (Joz, wee, ack)) turned me on to the concept of GitHub Apps. They are installed in your GitHub Organization, and are allowed to watch for specific events and send webhooks based on those actions. I created a new GitHub App for the GitHub Cop and told it to watch for newly created Repo events. When it sees a new repo is created, it immediately sends a webhook to an AWS API gateway I created.

This AWS API Gateway has a permanent public domain name, making it ideal as a target for some automation. It forwards the message to an internal Lambda. That Lambda validates the header hash added by the GitHub App to make sure it’s a valid message. If yes, it extracts the repo name that’s encoded in the webhook, and triggers the GitHub Cop with a repo selection.
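The validation step might look something like the sketch below. It assumes the webhook is configured with a shared secret, that the hash arrives in GitHub's `X-Hub-Signature-256` header as `sha256=<hex digest>` computed over the raw request body, and that the payload is a repository "created" event. The function names are hypothetical and the code that triggers the GitHub Cop is left out.

```python
import hashlib
import hmac
import json
from typing import Optional


def signature_is_valid(secret: bytes, body: bytes, signature_header: str) -> bool:
    """Check the X-Hub-Signature-256 header against the raw request body."""
    expected = "sha256=" + hmac.new(secret, body, hashlib.sha256).hexdigest()
    # compare_digest avoids leaking how many leading characters matched.
    return hmac.compare_digest(expected, signature_header)


def repo_to_police(secret: bytes, body: bytes, signature_header: str) -> Optional[str]:
    """Return the new repo's name for a valid 'created' webhook, else None."""
    if not signature_is_valid(secret, body, signature_header):
        return None
    event = json.loads(body)
    if event.get("action") != "created":
        return None
    return event.get("repository", {}).get("name")
```

The signature has to be checked against the exact bytes received, before any JSON parsing, or the digest will not match.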

The GitHub cop spins up and, since Targeting mode has been engaged, polices only the single repo that it was asked to police.

This entire process takes less than 60 seconds. From a user’s perspective, they can create a Repo, stretch in their chair for a bit, look at 2 funny cat pictures, and refresh the page, and the repo is now populated with all the settings and permissions required for it to operate securely in our Organization.

Tool: GitHub Cop Runs out of API Tokens

Medium: https://kymidd.medium.com/lets-do-devops-building-an-api-token-expired-circuit-breaker-58a47aa632b7

(the Action runs out of API tokens, some repos are skipped until token bucket is refilled)

The GitHub platform uses an API token bucket for each individual user. Each action is assigned a “cost”, and each user is given a specific number of tokens to spend per time period, which in GitHub’s case is generally an hour. Then your API token wallet is refilled, and you can go back to spending stuff.

I built this tool from scratch, and didn’t initially consider this. I was sure my janky script couldn’t be faster than the platform. Wow, I was wrong. Go me.

My quick fix hack was to wait a significant amount of time between each repo — say, process a repo, then wait 3 minutes before doing the next one. That works, but extends the runtime for this script out a HUGE amount. That’s not workable.

I decided to do it better. I found that the token budget you have remaining is provided to you in the HTTP header information of every response from GitHub’s platform. So I built a function that detects the budget remaining, and I call that function ahead of every repo policing.

If our token wallet has fallen below a specific threshold, we wait for 1 minute and then check again. Repeat forever until our wallet has been refilled by the github token fairy. When that’s detected, we continue.
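A minimal sketch of that circuit breaker, assuming the remaining budget is read from the `X-RateLimit-Remaining` response header. The threshold value and the `get_headers` callable (which would make a cheap API call and return its response headers) are illustrative assumptions. The lookup uses a plain mapping with that exact key; an HTTP client's case-insensitive header object would be more forgiving.

```python
import time
from typing import Callable, Mapping


def remaining_budget(headers: Mapping[str, str]) -> int:
    """Read the remaining request budget from a GitHub API response's headers."""
    return int(headers.get("X-RateLimit-Remaining", "0"))


def wait_for_budget(
    get_headers: Callable[[], Mapping[str, str]],
    threshold: int = 500,
    wait_seconds: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Block until the remaining budget is at or above the threshold; return it."""
    while True:
        remaining = remaining_budget(get_headers())
        if remaining >= threshold:
            return remaining
        sleep(wait_seconds)  # wallet is low: wait a minute, then check again
```

Calling `wait_for_budget(...)` once before each repo costs almost nothing when the wallet is full and only pauses the run when it actually needs to.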

That works exceptionally well. However, the GitHub Cop still takes almost 10 hours to run through all our repos! That’s too long, let’s work the problem.

Tool: GitHub Cop takes more than 10 hours to run sequentially

(Updated github cop to split work between two builders)

We have a ton of permissions and configurations we want our repos to have. Even just reading all the configurations we want to set to see if they match up with our baseline takes 20 or so seconds, and when you multiply that by 1500 repos, that means a single run of the GitHub Cop takes over 10 hours. That doesn’t work great for us. Even nightly, a 10-hour job takes too long. And imagine how poorly it’ll work as it scales!

But well, Actions can shard work over many builders, right? What if I put the workload over 128 builders (the max Actions supports)? Let’s do it!

Well, that failed spectacularly. The issue with this approach is that while GitHub has an overall API token budget limitation, there are also individual service limitations. That means that more than 90% of my calls to GitHub fail, because the GitHub platform feels that I’m DoS’ing them. Oops, sorry GitHub.

I found the max I could put this workload onto was 2 builders without triggering individual service thresholds. That’s a bummer. I’m splitting the time in 2, rather than splitting it by 128, but it’s still a significant improvement.
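One simple way to split the repo list between builders is sketched below, assuming each builder knows its own index and the total builder count (for example, from a build matrix). This is an illustration of the idea, not necessarily how the GitHub Cop divides its work.

```python
from typing import List, Sequence


def shard(repos: Sequence[str], index: int, total: int) -> List[str]:
    """Return the repos assigned to builder `index` out of `total` builders."""
    if not 0 <= index < total:
        raise ValueError("index must be in range(total)")
    # Sort first so every builder slices the same ordering of the list.
    return sorted(repos)[index::total]
```

With `total=2`, builder 0 takes every other repo starting from the first and builder 1 takes the rest, so each repo is policed exactly once per run.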

Summary

Hey, we did it! During this talk we went over the enormous, gigantic problem set that is moving where your code is stored. Then we went over a few of the specific problems I encountered during this migration, and how I solved them with process and tooling.