🔥Building a Teams Bot with AI Capabilities - Part 3 - Delegated Permissions and Making Lambda Stateful for OAuth2🔥

aka, "do you remember me?"

This blog series focuses on presenting complex DevOps projects as simple and approachable via plain language and lots of pictures. You can do it!

These articles are supported by readers; please consider subscribing to support me writing more of these articles <3 :)

This article is part of a series, because one article would be absolutely massive.

Part 3 (this article): Delegated Permissions and Making Lambda Stateful for OAuth2

Part 4: Building the Receiver lambda to store tokens and state

Hey all!

During the last two articles, we talked about how to get started building a Teams Bot - we built the manifest, registered the Bot resource, and linked it to an App Registration. That App Registration contains all sorts of wonderful permissions that we need to use in order to build conversation context and operate as a bot.

However, all the permissions are set as “Delegated” (vs “Application”) permissions. That means the Bot can’t do any of those things on its own; by itself it has no rights at all, and it can only act on behalf of a user who has signed in.

I talked to our Azure admin about just granting the Bot Application permissions to:

Read all Channels

Read all Messages in any channel

Read all private chats

And he just laughed and laughed. Granting a bot static permissions like that would be bizarre; that’s way too many permissions! And if someone were able to steal the Client ID and Client Secret, they could exfiltrate absolutely all of the data in our Teams.

Thus, delegated permissions.

Let’s talk about what delegated permissions are, and then about the changes we need to make to our Receiver lambda (which is stateless; it’s a Lambda, after all) so it can operate in a stateful way.

Don’t understand why our Receiver lambda needs state? Well, read on! It’s all about the safety mechanism Azure uses to deliver OAuth2 tokens.

If you’d rather skip right to the code, this tool is available and open source. Please, go build!

OAuth2 Tokens in Delegated Access - Theory

So, Delegated Permissions. That’s cool; it shouldn’t matter whether we use a User’s token or the App’s token, right? Well, the flow is a little different, and there’s one item we’ll call out that makes a pretty big change for us.

First of all, users have to take an action before a token is issued. They’ll need to visit your organization’s SSO page and pick an account to log in as. Teams makes that pretty easy with a “Card” containing a button; the user clicks it and is taken to the SSO page, which is hosted by Microsoft (or your third-party SSO provider).
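Here’s a minimal sketch of what that Card could look like: a Bot Framework hero card whose single `openUrl` button points at the Microsoft identity platform v2.0 authorize endpoint. The tenant ID, client ID, redirect URI, and scopes are hypothetical placeholders, and the sketch assumes the authorization code flow with `response_mode=form_post`, where what actually gets POSTed back is a short-lived code that the Receiver exchanges for the access token. The article’s own implementation may request the token differently.

```python
from urllib.parse import urlencode

# Hypothetical placeholders: substitute your own tenant, App Registration, and Receiver URL.
TENANT_ID = "00000000-0000-0000-0000-000000000000"
CLIENT_ID = "11111111-1111-1111-1111-111111111111"
REDIRECT_URI = "https://receiver.example.com/oauth/callback"
# Example delegated Microsoft Graph scopes; request whatever your App Registration grants.
SCOPES = "ChannelMessage.Read.All Chat.Read"


def build_authorize_url(state: str) -> str:
    """Build a Microsoft identity platform v2.0 authorize URL."""
    params = {
        "client_id": CLIENT_ID,
        "response_type": "code",
        "redirect_uri": REDIRECT_URI,   # must be registered on the App Registration
        "response_mode": "form_post",   # Microsoft POSTs the result to redirect_uri
        "scope": SCOPES,
        "state": state,                 # echoed back so the callback can be checked
    }
    return (f"https://login.microsoftonline.com/{TENANT_ID}/oauth2/v2.0/authorize?"
            + urlencode(params))


def build_signin_card(state: str) -> dict:
    """Wrap the authorize URL in a Bot Framework hero card with a single button."""
    return {
        "contentType": "application/vnd.microsoft.card.hero",
        "content": {
            "title": "Sign in required",
            "text": "Sign in so I can read this conversation on your behalf.",
            "buttons": [
                {"type": "openUrl", "title": "Sign in", "value": build_authorize_url(state)},
            ],
        },
    }
```

The `state` value should be unguessable, and the Receiver should verify it when the callback arrives; that is standard OAuth2 hygiene rather than anything Teams-specific.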

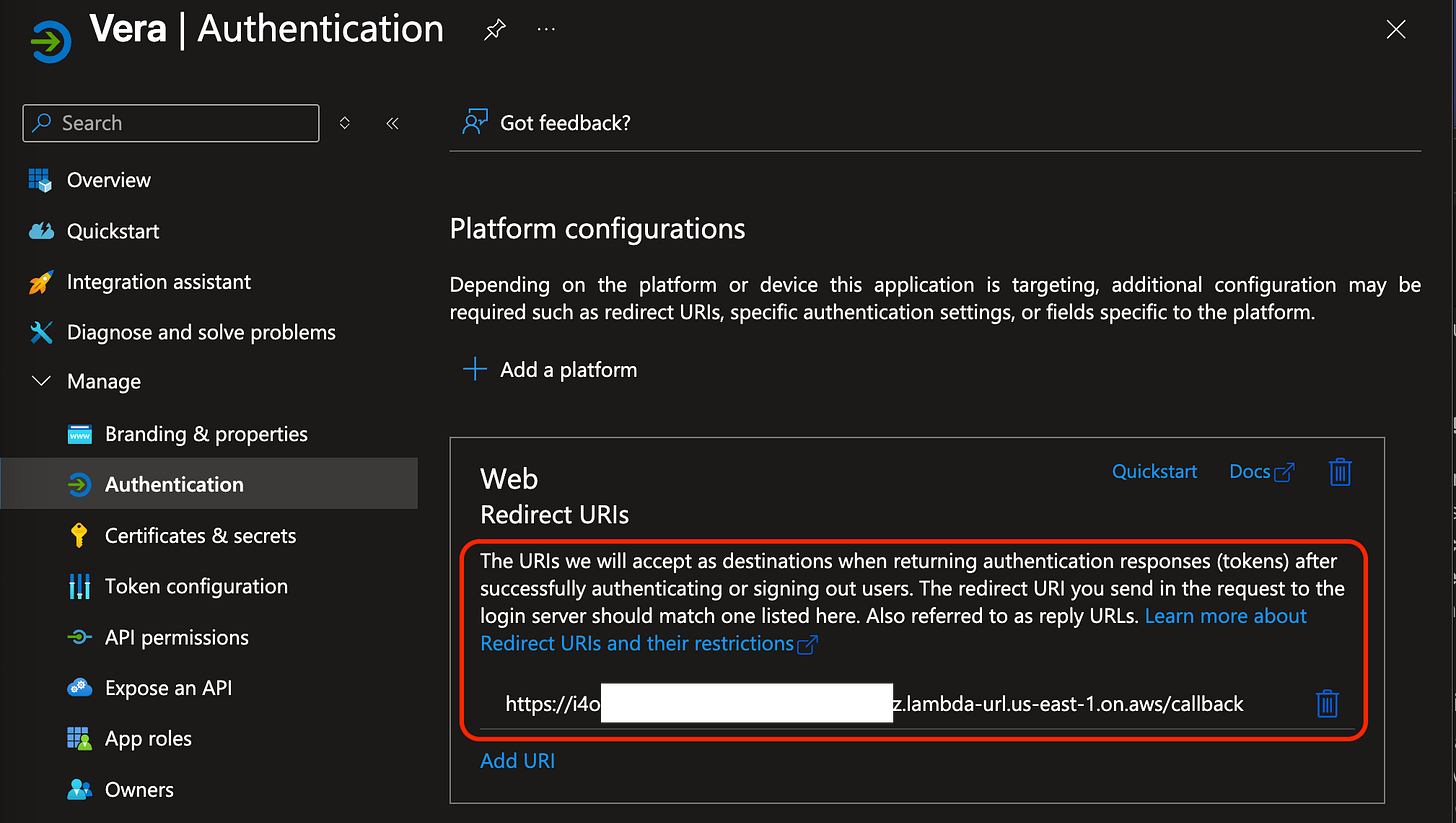

Now, when the user does that, we don’t magically get a token back in the same request. Instead, the Microsoft App Registration and Microsoft’s token-issuing infrastructure POST the token to a permitted URL (a redirect URI registered on the App Registration).
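On the Lambda side, that POST arrives as an `application/x-www-form-urlencoded` body. A minimal sketch of decoding it, assuming the Receiver sits behind a Lambda function URL or an API Gateway HTTP API (both put the raw body in `body` and may base64-encode it, flagged by `isBase64Encoded`):

```python
import base64
from urllib.parse import parse_qs


def parse_form_post(event: dict) -> dict:
    """Decode a form-encoded body from a Lambda function URL / API Gateway event."""
    body = event.get("body") or ""
    if event.get("isBase64Encoded"):
        body = base64.b64decode(body).decode("utf-8")
    # parse_qs returns a list per key; an OAuth2 callback sends each field once.
    return {key: values[0] for key, values in parse_qs(body).items()}
```

Which fields show up depends on the flow you request (for example `code` and `state` for the authorization code flow). On failure, Microsoft sends `error` and `error_description` instead, so check for `error` before doing anything else.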

Do you see the issue yet? We’re running a LAMBDA that executes once per inbound connection, and in order to use a Delegated Token, we need a SECOND inbound connection that carries the token.

That fundamentally won’t work in a stateless implementation: by the time the token arrives, the invocation that received the user’s message has already finished, and everything it knew is gone.

So we need to build some state - for two distinct purposes.

Stateful “Conversation” DynamoDB Table

First, when we receive the initial webhook, it contains the “tag” message where the user triggered the Bot. If we don’t have a token for the user, or that token has expired, this invocation of our lambda can’t proceed. So we need to store the conversation for the next run, which happens when the user (hopefully) authenticates via SSO and the token is POSTed to our lambda.

These conversations aren’t particularly sensitive. They contain the user’s AzureAD/Entra object ID and the text of the message that triggered the bot; any documents appear only as links, and no surrounding conversation context is included. So there’s no real impetus to encrypt this, but you do you if it gives you good security feels.

This storage operates like a queue: we store the conversation, and when the token arrives, we “process” it and delete it immediately after use.
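A minimal sketch of that queue behavior, assuming `table` is a boto3 DynamoDB `Table` resource for the Conversation table (hash key `aadObjectId`). The `ConversationQueue` class and its one-hour default TTL are hypothetical. Using `delete_item` with `ReturnValues="ALL_OLD"` reads and deletes the parked item in a single call, so two concurrent callbacks can’t both replay it.

```python
import json
import time
from typing import Optional


class ConversationQueue:
    """One-slot-per-user "queue" of conversations waiting on a sign-in.

    `table` is assumed to be a boto3 DynamoDB Table resource (or anything with the
    same put_item/delete_item methods) whose hash key is aadObjectId.
    """

    def __init__(self, table, ttl_seconds: int = 3600):
        self.table = table
        self.ttl_seconds = ttl_seconds  # hypothetical: how long to wait for a sign-in

    def stash(self, aad_object_id: str, event: dict) -> None:
        """Park the triggering webhook; a newer trigger replaces an older one."""
        self.table.put_item(Item={
            "aadObjectId": aad_object_id,
            "conversationEvent": json.dumps(event),
            "deleteAt": int(time.time()) + self.ttl_seconds,
        })

    def pop(self, aad_object_id: str) -> Optional[dict]:
        """Delete the parked webhook and return it, or None if nothing was waiting."""
        response = self.table.delete_item(
            Key={"aadObjectId": aad_object_id},
            ReturnValues="ALL_OLD",  # hand back the item as it was before deletion
        )
        item = response.get("Attributes")
        if item is None:
            return None
        return json.loads(item["conversationEvent"])
```

Because `aadObjectId` is the only key, each user gets exactly one slot: if they tag the bot twice before signing in, only the latest message is replayed.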

Stateful “Token” DynamoDB Table

Second, we don’t want the user to have to SSO for every single message. That’d be crazy, especially since the token is valid for around an hour (60-90 minutes, chosen at random). So when we receive a new token, we need to persist it, so we can use it the next time that user triggers the bot.

When a user triggers the bot, we look up the stored token keyed by that user’s ID. If there is one, we check whether it’s expired; if it is, we throw it away and push the “card” to the user so they can authorize a new token.
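A minimal sketch of that lookup, assuming a boto3 DynamoDB `Table` resource for the Token table and a `deleteAt` attribute holding the token’s expiry in epoch seconds. The function name and the 60-second safety margin are hypothetical. Even with TTL enabled, DynamoDB removes expired items in the background rather than at the exact expiry time, so the sketch compares `deleteAt` against the clock itself.

```python
import time
from typing import Optional


def load_valid_token(table, aad_object_id: str, now: Optional[float] = None,
                     skew_seconds: int = 60) -> Optional[dict]:
    """Return the stored token item if it is still usable, else None.

    `table` is assumed to behave like a boto3 DynamoDB Table resource keyed on
    aadObjectId, and each item is assumed to carry deleteAt (expiry, epoch seconds).
    An expired item is deleted so the caller can push a fresh sign-in Card.
    """
    key = {"aadObjectId": aad_object_id}
    item = table.get_item(Key=key).get("Item")
    if item is None:
        return None  # never signed in, or already cleaned up
    now = time.time() if now is None else now
    # Treat tokens that expire within skew_seconds as already expired, so the
    # Worker doesn't start a job with a token that dies mid-request.
    if int(item["deleteAt"]) - skew_seconds <= now:
        table.delete_item(Key=key)
        return None
    return item
```

A `None` result covers both “never signed in” and “expired”, which matches the routing: in either case the Receiver pushes the sign-in Card.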

Tokens are particularly sensitive: anyone who obtains one can operate as the user and do any of the authorized actions, which in this case means reading their private messages and all user data in the channels they belong to. Not ideal. So we need to encrypt this data. I implemented encryption using a KMS CMK (an AWS-hosted customer managed key (CMK) from the Key Management Service (KMS)). That means the data is strongly encrypted with AES-256-GCM, suitable for HIPAA, HITECH, and FISMA/FedRAMP.
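A minimal sketch of encrypting and decrypting a token with KMS, assuming `kms` is a boto3 KMS client and the key is reachable via `alias/VeraCmk` (the alias the Terraform later in this article creates). The helper names are hypothetical. Passing the user’s ID as the `EncryptionContext` is a design choice of this sketch: KMS refuses to decrypt unless the same context is supplied, which ties each ciphertext to one user.

```python
import base64

KEY_ID = "alias/VeraCmk"  # alias created by the Terraform in this article


def encrypt_token(kms, aad_object_id: str, access_token: str) -> str:
    """Encrypt a token under the CMK and return base64 text that is safe to store or log."""
    response = kms.encrypt(
        KeyId=KEY_ID,
        Plaintext=access_token.encode("utf-8"),
        EncryptionContext={"aadObjectId": aad_object_id},  # must match on decrypt
    )
    return base64.b64encode(response["CiphertextBlob"]).decode("ascii")


def decrypt_token(kms, aad_object_id: str, ciphertext_b64: str) -> str:
    """Decrypt a stored token; fails if the encryption context doesn't match."""
    response = kms.decrypt(
        CiphertextBlob=base64.b64decode(ciphertext_b64),
        EncryptionContext={"aadObjectId": aad_object_id},
    )
    return response["Plaintext"].decode("utf-8")
```

KMS `Encrypt` accepts at most 4,096 bytes of plaintext. That is usually enough for an access token, but if yours can be larger, switch to envelope encryption with `GenerateDataKey`.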

I want the bot to respond as quickly as possible, since the user is probably waiting on us in their Teams app, so we pack the encrypted token together with the message that triggered the bot and hand both to the Worker lambda. I generally log the handoff between the Receiver layer (which receives conversation triggers and stores tokens) and the Worker layer (which builds the context and processes all the AI conversations), so we make sure the token is always encrypted at that handoff. The Worker then needs to call the KMS CMK again to decrypt the token to plaintext.
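A minimal sketch of that handoff, assuming `lambda_client` is a boto3 Lambda client. The Worker function name and payload keys are hypothetical. `InvocationType="Event"` queues the Worker asynchronously, so the Receiver can return to Teams right away instead of waiting on the AI work.

```python
import json

WORKER_FUNCTION_NAME = "VeraTeamsWorker"  # hypothetical Worker lambda name


def hand_off_to_worker(lambda_client, conversation_event: dict, encrypted_token: str) -> int:
    """Asynchronously invoke the Worker with the trigger message and the still-encrypted token."""
    payload = {
        "conversationEvent": conversation_event,
        "encryptedToken": encrypted_token,  # never plaintext at this boundary
    }
    response = lambda_client.invoke(
        FunctionName=WORKER_FUNCTION_NAME,
        InvocationType="Event",  # fire-and-forget so the Receiver can answer Teams quickly
        Payload=json.dumps(payload).encode("utf-8"),
    )
    return response["StatusCode"]  # 202 when the async invocation is accepted
```

Since the payload only ever carries ciphertext, logging it at this boundary doesn’t leak a usable token.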

The token is never culled asynchronously by our application; it’s only ever deleted when both of the following are true:

The user interacts with the bot

The Receiver lambda finds the stored token is expired

In that case, the Receiver deletes the stored token for that user (the primary key is the user’s AzureAD/Entra ID) and pushes a Card to the user so they can authorize a new token.

Routing Logic

You’ll notice there are a few different flows here.

If the user has never interacted with the bot before, or if their token is expired, we store the Conversation and push a Card so the user can authorize a new Token. When the SSO Token arrives, we store it in the DynamoDB Token table, fetch the stored Conversation from the Conversation table, and trigger the Worker.

If the user’s token is present and valid, we fetch the user’s encrypted Token, pack it with the Conversation webhook directly, and trigger the Worker.

Notably, the Receiver handles all this logic. The Worker is only triggered when we’ve validated that all the requisite data is populated for us to work the conversation.
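The routing above can be sketched as two handlers: one for the Teams webhook and one for the SSO callback. To keep the sketch independent of boto3, every side effect is injected through a hypothetical `ReceiverDeps` bundle; in a real Receiver, each callable would wrap a DynamoDB, Teams, or Lambda call. The item fields (`accessToken`, `deleteAt`) follow the Token table comments, and `from.aadObjectId` is where Teams puts the sender’s Entra ID on an inbound activity.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class ReceiverDeps:
    """Hypothetical seams for the Receiver; wire each one to DynamoDB, Teams, or Lambda."""
    get_token: Callable[[str], Optional[dict]]    # stored token item for a user, or None
    delete_token: Callable[[str], object]
    put_token: Callable[[str, dict], object]
    stash_conversation: Callable[[str, dict], object]
    pop_conversation: Callable[[str], Optional[dict]]
    send_signin_card: Callable[[dict], object]
    invoke_worker: Callable[[dict, str], object]  # (webhook, encrypted token)


def on_teams_message(deps: ReceiverDeps, event: dict, now: Optional[float] = None) -> str:
    """Route an inbound Teams webhook: go straight to the Worker, or ask for sign-in."""
    user = event["from"]["aadObjectId"]
    now = time.time() if now is None else now
    token = deps.get_token(user)
    if token is not None and int(token["deleteAt"]) > now:
        # Valid token: pack it (still encrypted) with the webhook and hand off.
        deps.invoke_worker(event, token["accessToken"])
        return "worker"
    if token is not None:
        deps.delete_token(user)  # expired: throw it away
    # No usable token: park the conversation and ask the user to sign in.
    deps.stash_conversation(user, event)
    deps.send_signin_card(event)
    return "card"


def on_token_received(deps: ReceiverDeps, user: str, token_item: dict) -> str:
    """Handle the SSO callback: store the token, then replay any parked conversation."""
    deps.put_token(user, token_item)
    event = deps.pop_conversation(user)
    if event is None:
        return "stored"  # nothing was waiting on this sign-in
    deps.invoke_worker(event, token_item["accessToken"])
    return "worker"
```

Only the Receiver makes routing decisions here; `invoke_worker` is called only once both the webhook and an unexpired token are in hand.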

Let’s Code It

Okay, theory is well and good, but let’s build it.

First, let’s build the Conversation table. It caches Conversations when the user’s Token is missing or invalid. We set this up as a small, low-volume table (read_capacity and write_capacity set the provisioned capacity units).

We set the “hash_key” (required) to the only attribute that needs to be unique and present on every read and write: the aadObjectId, which identifies a particular user’s conversation.

Note there’s no “contents” field defined here. DynamoDB is really cool here: it doesn’t require non-key attributes to be predefined when you create the table. The schema is flexible. #Neat

We do set the optional TTL attribute, deleteAt, which can be used to expire items. We populate it in our code, but we handle all cleanup directly in our own code paths, and I’m not clear whether DynamoDB can do this cleanup itself, independently of our application.

If you know, please tell me!

```hcl
locals {
  # This is the name of the DynamoDB table
  conversation_table_name = "VeraTeamsConversations"
}

resource "aws_dynamodb_table" "conversations" {
  name           = local.conversation_table_name
  billing_mode   = "PROVISIONED"
  read_capacity  = 20
  write_capacity = 20
  hash_key       = "aadObjectId"

  attribute {
    name = "aadObjectId"
    type = "S"
  }

  # Store entire conversation event as a JSON string with name conversationEvent

  ttl {
    attribute_name = "deleteAt" # Epoch time in seconds, needs to match token validity
    enabled        = true
  }

  tags = {
    Name = local.conversation_table_name
  }
}
```

Next, the Token table. It looks almost exactly the same. We again use aadObjectId as the hash_key, since both the webhook and the Token arrive carrying the user’s ID, which makes it a natural way to identify who owns each item.

```hcl
locals {
  # This is the name of the DynamoDB table
  token_table_name = "VeraTeamsTokens"
}

resource "aws_dynamodb_table" "tokens" {
  name           = local.token_table_name
  billing_mode   = "PROVISIONED"
  read_capacity  = 20
  write_capacity = 20
  hash_key       = "aadObjectId"

  attribute {
    name = "aadObjectId"
    type = "S"
  }

  # Store accessToken from oauth2 server as string with name accessToken

  ttl {
    attribute_name = "deleteAt" # Epoch time in seconds, needs to match token validity
    enabled        = true
  }

  tags = {
    Name = local.token_table_name
  }
}
```
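To line `deleteAt` up with the token’s real lifetime, one option is to derive it from the `expires_in` field (seconds) in Microsoft’s token response. A minimal sketch, with a hypothetical helper name; the `accessToken` value is assumed to already be KMS ciphertext. DynamoDB TTL only acts on a Number attribute holding epoch seconds, so the expiry is stored as an integer, not a string or milliseconds.

```python
import time
from typing import Optional


def build_token_item(aad_object_id: str, encrypted_token: str, expires_in: int,
                     now: Optional[float] = None) -> dict:
    """Shape a row for the VeraTeamsTokens table.

    expires_in is the token lifetime in seconds from Microsoft's token response.
    DynamoDB TTL only honors a Number attribute holding epoch *seconds*.
    """
    issued_at = int(time.time() if now is None else now)
    return {
        "aadObjectId": aad_object_id,     # hash_key
        "accessToken": encrypted_token,   # KMS ciphertext (base64), never plaintext
        "deleteAt": issued_at + int(expires_in),
    }
```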

Next, we create the KMS CMK. I specify that this key should automatically rotate its key material, which is a good security practice. My application doesn’t do anything special to handle rotation, and it doesn’t need to: when KMS rotates a key, it keeps every previous version of the key material and automatically uses the right one on Decrypt, so a token encrypted before a rotation still decrypts afterward.

If you’re building this at home, you can safely leave key rotation on.

```hcl
locals {
  cmk_alias = "alias/VeraCmk"
}

# Create the KMS key
resource "aws_kms_key" "cmk" {
  description             = "Key for encrypting access tokens between Vera lambda layers"
  deletion_window_in_days = 10
  enable_key_rotation     = true

  tags = {
    Name = "VeraCmk"
  }
}

# Optional alias for easier reference
resource "aws_kms_alias" "cmk_alias" {
  name          = local.cmk_alias
  target_key_id = aws_kms_key.cmk.key_id
}
```

Summary

I had intended to cover the entire strategy: the resources, their implementation, and the application code that uses them. But we’re already long, and that would make this huge, so let’s call it here.

This article showed why we’re pushing so hard to use Delegated Access permissions, and how Azure implements Delegated Tokens. We talked about why that method is inherently incompatible with stateless applications, and covered how we’re going to establish stateful behavior for our stateless lambda application.

Then we walked through the resources we’ll create to make that behavior work, as well as the Terraform configurations that build those resources.

Next up, we’ll talk about the application changes that make the Vera Teams app support state and Delegated OAuth2 tokens from the user, plus the routing logic we’ll use to make it all work.

Hope it’s all going great out there. Hello from AWS re:Inforce in Philly! I’m giving a talk on Tuesday, and I’m writing this from a conveniently empty chair in a side hall.

Good luck out there.

kyler